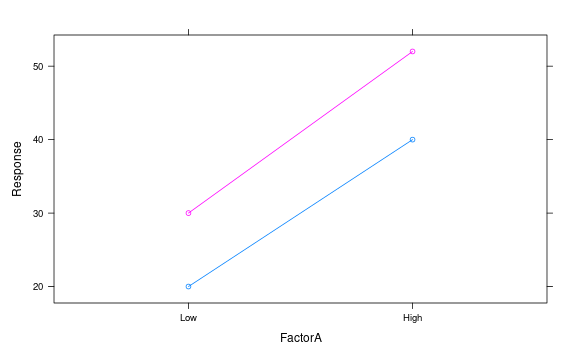

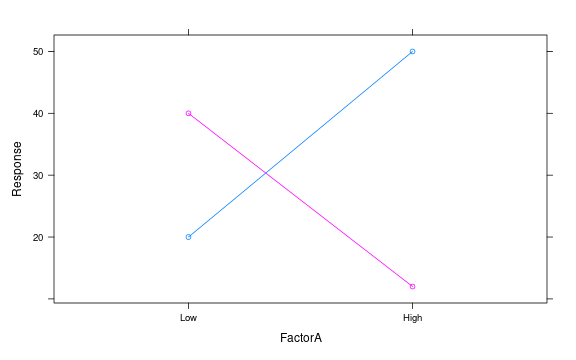

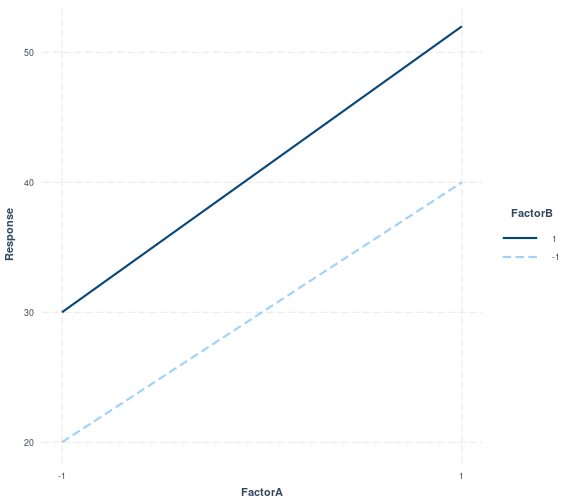

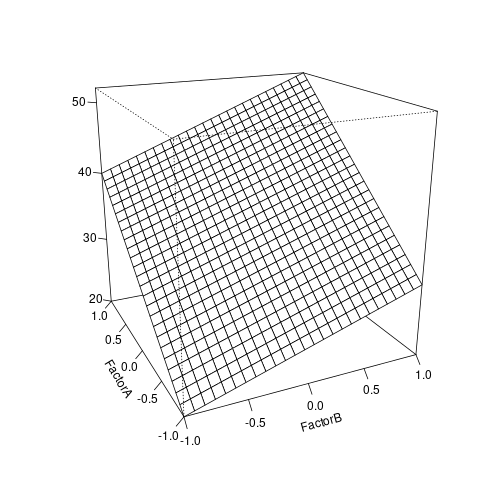

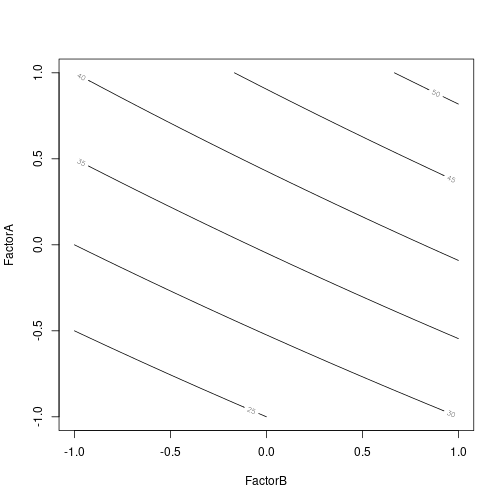

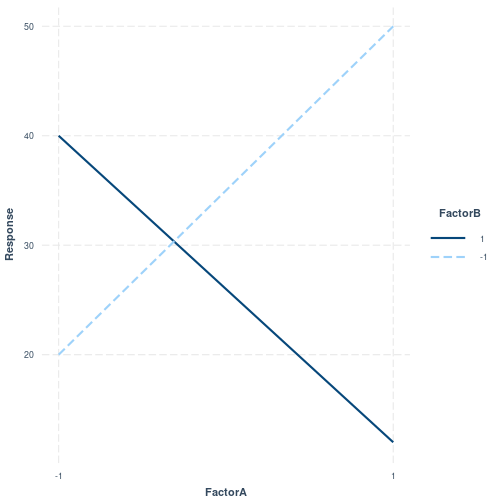

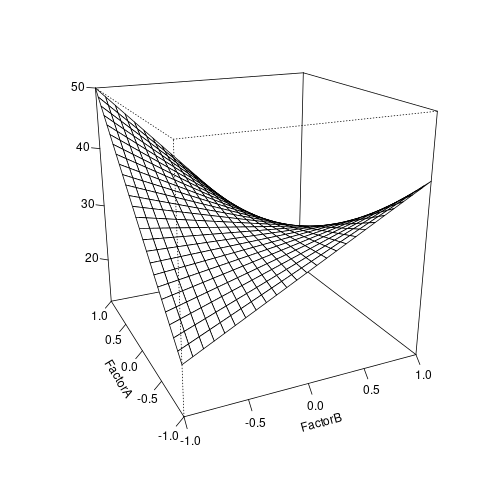

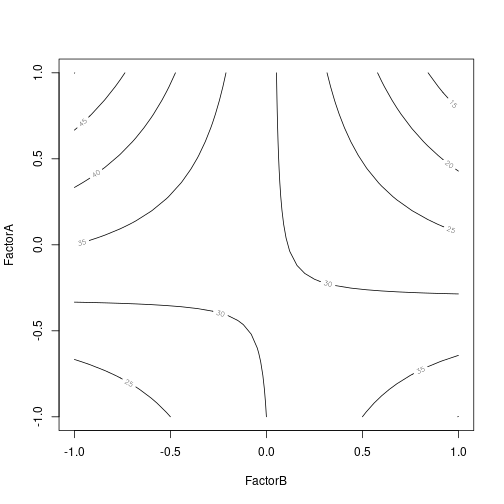

class: center, middle, inverse, title-slide

.title[
# Design and Analysis of Experiments Using R
]
.subtitle[
## Factorial Design
]
.author[
### Olga Lyashevska
]
.date[
### 2022-10-16
]

---

# Factorial design

- Basic definitions and principles
- The advantages
- The two-factor factorial design
- The general factorial design

---

# Learning Objectives

1. Learn the definitions of main effects and interactions.
2. Learn about two-factor factorial experiments.
3. Learn how the analysis of variance can be extended to factorial experiments.
4. Know how to check model assumptions in a factorial experiment.
5. Understand how sample size decisions can be evaluated for factorial experiments.

---

# Two factor factorial design

Many experiments involve the study of the effects of two or more factors. In general, factorial designs are most efficient for this type of experiment.

By a factorial design, we mean that in each complete trial or replicate of the experiment, all possible combinations of the levels of the factors are investigated.

---

# Two factor factorial design

For example, if there are *a* levels of factor *A* and *b* levels of factor *B*, each replicate contains all *ab* treatment combinations.

When factors are arranged in a factorial design, they are often said to be *crossed*.
---

# Factorial *a* by *b* design

```r
rm(list = ls())
set.seed(1234)
# Create all combinations of factor variables
design <- expand.grid(FactorA = c("Low", "High"), FactorB = c("Low", "High"))
design
```

```
##   FactorA FactorB
## 1     Low     Low
## 2    High     Low
## 3     Low    High
## 4    High    High
```

This is an unreplicated `\(2^2\)` factorial design.

---

# Replication

```r
# Stack two copies of the design to obtain two replicates
design <- rbind(design, design)
design
```

```
##   FactorA FactorB
## 1     Low     Low
## 2    High     Low
## 3     Low    High
## 4    High    High
## 5     Low     Low
## 6    High     Low
## 7     Low    High
## 8    High    High
```

---

# Randomisation

```r
# Put the runs in random order
design <- design[order(sample(1:nrow(design))), ]
design
```

```
##   FactorA FactorB
## 7     Low    High
## 2    High     Low
## 6    High     Low
## 1     Low     Low
## 4    High    High
## 3     Low    High
## 8    High    High
## 5     Low     Low
```

---

# Add response

```r
# Index by the original row numbers so each response stays with its treatment
design$Response <- rep(c(20, 40, 30, 52), 2)[as.integer(rownames(design))]
design
```

```
##   FactorA FactorB Response
## 7     Low    High       30
## 2    High     Low       40
## 6    High     Low       40
## 1     Low     Low       20
## 4    High    High       52
## 3     Low    High       30
## 8    High    High       52
## 5     Low     Low       20
```

---

# Main effect

The effect of a factor is defined to be the change in response produced by a change in the level of the factor.

This is frequently called a *main effect* because it refers to the primary factors of interest in the experiment.

---

# Factorial experiment without interaction

<img src="figs/factorial-design1.png" style="width: 80%"/>

---

# Main effect

The main effect of factor A in this two-level design is the average response at the high level of A minus the average response at the low level of A. Numerically, this is

`$$A=\frac{40+52}{2}-\frac{20+30}{2}=21$$`

That is, increasing factor A from the low level to the high level causes an average response increase of 21 units.

---

# Main effect

Similarly, the main effect of B is

`$$B=\frac{30+52}{2}-\frac{20+40}{2}=11$$`

Increasing factor B from the low level to the high level causes an average response increase of 11 units.
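---

# Main effects in R

The two main effects above can be checked directly from the data. The following is a minimal sketch, assuming the four responses are listed in standard order (factor A varying fastest) as in the figure; the names `d`, `mean_A`, `mean_B`, `effect_A` and `effect_B` are illustrative and not part of the original slides.

```r
# Unreplicated 2^2 design with responses in standard order (A varies fastest)
d <- expand.grid(FactorA = c("Low", "High"), FactorB = c("Low", "High"))
d$Response <- c(20, 40, 30, 52)

# Average response at each level of each factor
mean_A <- tapply(d$Response, d$FactorA, mean)
mean_B <- tapply(d$Response, d$FactorB, mean)

# Main effect = mean at the high level minus mean at the low level
effect_A <- unname(mean_A["High"] - mean_A["Low"])
effect_B <- unname(mean_B["High"] - mean_B["Low"])

effect_A  # 21
effect_B  # 11
```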
---

# Note

If the factors appear at more than two levels, the above procedure must be modified because there are other ways to define the effect of a factor. This point is discussed more completely later.

---

# Factorial experiment with interaction

In some experiments, we may find that the difference in response between the levels of one factor is not the same at all levels of the other factors.

When this occurs, there is an *interaction* between the factors.

---

# Factorial experiment with interaction

<img src="figs/factorial-design2.png" style="width: 80%"/>

---

At the low level of factor B, the A effect is

`$$A=50-20=30$$`

At the high level of factor B, the A effect is

`$$A=12-40=-28$$`

Because the effect of A depends on the level chosen for factor B, we see that there is interaction between A and B.

---

# Interaction effect

The magnitude of the *interaction effect* is one-half the difference between these two A effects, or

`$$AB = \frac{(-28 - 30)}{2} = -29$$`

---

# A factorial design without interaction

Plot the response data against factor A for both levels of factor B.

<img src="figs/factorial-design3.png" style="width: 70%"/>

B− and B+ lines are approximately parallel, indicating a lack of interaction between factors A and B.

---

# A factorial design with interaction

Plot the response data with interaction.

<img src="figs/factorial-design4.png" style="width: 70%"/>

B− and B+ lines are not parallel, indicating an interaction between factors A and B.
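---

# Interaction effect in R

The interaction effect defined earlier can be reproduced from the four responses of the design with interaction. This is a small sketch rather than a general-purpose routine; the object names `d2`, `simple_A` and `effect_AB` are assumptions made for illustration.

```r
# 2^2 design with interaction; responses in standard order (A varies fastest)
d2 <- expand.grid(FactorA = c("Low", "High"), FactorB = c("Low", "High"))
d2$Response <- c(20, 50, 40, 12)

# Effect of A (high minus low) computed separately at each level of B
simple_A <- sapply(split(d2, d2$FactorB), function(g) {
  g$Response[g$FactorA == "High"] - g$Response[g$FactorA == "Low"]
})
simple_A  # Low: 30, High: -28

# Interaction effect: half the difference between the two A effects
effect_AB <- unname(simple_A["High"] - simple_A["Low"]) / 2
effect_AB  # -29
```

If the two entries of `simple_A` were equal, the lines in the interaction plot would be parallel and `effect_AB` would be zero.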
---

```r
# Design without interaction
design1 <- expand.grid(FactorA = c("Low", "High"), FactorB = c("Low", "High"))
design1$Response <- c(20, 40, 30, 52)
design1
```

```
##   FactorA FactorB Response
## 1     Low     Low       20
## 2    High     Low       40
## 3     Low    High       30
## 4    High    High       52
```

---

# [lattice](https://cran.r-project.org/web/packages/lattice/index.html) package

```r
# install.packages("lattice")
# ?lattice::xyplot
library(lattice)
# One line per level of FactorB; points joined across levels of FactorA
xyplot(Response ~ FactorA, groups = FactorB, type = "b", data = design1)
```

---

```r
# Design with interaction
design2 <- expand.grid(FactorA = c("Low", "High"), FactorB = c("Low", "High"))
design2$Response <- c(20, 50, 40, 12)
design2
```

```
##   FactorA FactorB Response
## 1     Low     Low       20
## 2    High     Low       50
## 3     Low    High       40
## 4    High    High       12
```

---

```r
xyplot(Response ~ FactorA, groups = FactorB, type = "b", data = design2)
```

---

# Quantitative design factors

Suppose that both of our design factors (Factor A, Factor B) are quantitative (such as temperature, pressure, time). Then a regression model representation of the two-factor factorial experiment could be written as

`$$y=\beta_{0}+\beta_{1}x_{1}+\beta_{2}x_{2}+\beta_{12}x_{1}x_{2}+\epsilon$$`

where `\(y\)` is the response, the `\(\beta\)`’s are parameters whose values are to be determined, `\(x_{1}\)` is a variable that represents factor `\(A\)`, `\(x_{2}\)` is a variable that represents factor `\(B\)`, and `\(\epsilon\)` is a random error term. `\(x_{1}x_{2}\)` represents the interaction between `\(x_{1}\)` and `\(x_{2}\)`.
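---

# Coding quantitative factors

In a two-level design, the variables `\(x_{1}\)` and `\(x_{2}\)` are commonly expressed on a coded scale where the low level is -1 and the high level is +1. The sketch below shows one way to do this coding; the temperature and pressure settings are hypothetical values chosen only for illustration, and `code_levels()` is a helper defined here, not a function from any package.

```r
# Hypothetical natural-unit settings (assumed, not taken from the slides)
temperature <- c(150, 200, 150, 200)
pressure    <- c(10, 10, 20, 20)

# Map the low level to -1 and the high level to +1
code_levels <- function(x) {
  centre     <- (max(x) + min(x)) / 2
  half_range <- (max(x) - min(x)) / 2
  (x - centre) / half_range
}

coded <- data.frame(x1 = code_levels(temperature),
                    x2 = code_levels(pressure))
coded$x1x2 <- coded$x1 * coded$x2  # column for the interaction term
coded
```

With this coding, a one-unit change in a coded variable is half the distance between the low and high levels.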
---

# No interaction design

```r
# Coded levels: -1 = low, 1 = high
design11 <- expand.grid(FactorA = c(-1, 1), FactorB = c(-1, 1))
design11$Response <- c(20, 40, 30, 52)
design11
```

```
##   FactorA FactorB Response
## 1      -1      -1       20
## 2       1      -1       40
## 3      -1       1       30
## 4       1       1       52
```

---

# Regression model

```r
lm(Response~FactorA+FactorB+FactorA*FactorB, data=design11)
```

```
Call:
lm(formula = Response ~ FactorA + FactorB + FactorA * FactorB, 
    data = design11)

Coefficients:
    (Intercept)          FactorA          FactorB  FactorA:FactorB  
           35.5             10.5              5.5              0.5  
```

---

# Regression model

The parameter estimates in this regression model are related to the effect estimates.

We found the main effects of A and B to be A = 21 and B = 11. The estimates of `\(\beta_{1}\)` and `\(\beta_{2}\)` are one-half the value of the corresponding main effect, because moving a coded variable from -1 to +1 is a change of two units:

`$$\beta_{1} = \frac{21}{2} = 10.5$$`

`$$\beta_{2} = \frac{11}{2} = 5.5$$`

---

# Regression model

The interaction effect is AB = 1, so the value of the interaction coefficient in the regression model is

`$$\beta_{12} = \frac{1}{2} = 0.5$$`

The parameter `\(\beta_{0}\)` is estimated by the average of all four responses:

`$$\beta_{0} = \frac{(20 + 40 + 30 + 52)}{4} = 35.5$$`

---

# Regression model

Therefore, the fitted regression model is

`$$y=35.5+10.5x_{1}+5.5x_{2}+0.5x_{1}x_{2}$$`

The interaction contribution to this experiment is negligible.

---

# [interactions](https://cran.r-project.org/web/packages/interactions/index.html) package

```r
# install.packages("interactions")
library(interactions)
fit <- lm(Response~FactorA+FactorB+FactorA*FactorB, data=design11)
# Fitted lines of Response against FactorA, one per level of FactorB
interact_plot(fit, pred = FactorA, modx = FactorB)
```

---

# The response surface

```r
# install.packages("rsm")
library(rsm)
persp(fit, FactorA~FactorB)
```

---

# The contour plot

```r
contour(fit, FactorA ~ FactorB, image = FALSE)
```

---

# Design with interaction

Let's go back to our design with interaction.
```r
# Coded levels: -1 = low, 1 = high
design22 <- expand.grid(FactorA = c(-1, 1), FactorB = c(-1, 1))
design22$Response <- c(20, 50, 40, 12)
design22
```

```
##   FactorA FactorB Response
## 1      -1      -1       20
## 2       1      -1       50
## 3      -1       1       40
## 4       1       1       12
```

---

# Significant interaction effect

```r
fit <- lm(Response~FactorA+FactorB+FactorA*FactorB, data=design22)
interact_plot(fit, pred = FactorA, modx = FactorB)
```

---

# The response surface

```r
persp(fit, FactorA~FactorB)
```

---

# The contour plot

```r
contour(fit, FactorA ~ FactorB, image = FALSE)
```

---

# The importance of interaction

Generally, when an interaction is large, the corresponding main effects have little practical meaning.

For this experiment we would estimate the main effect of A to be `\(\frac{50+12}{2}-\frac{20+40}{2}=1\)`, which is very small, and we are tempted to conclude that there is no effect due to A.

However, when we examine the effects of A at different levels of factor B, we see that this is not the case: the A effect is 30 at the low level of B and -28 at the high level of B. Factor A has an effect, but it depends on the level of factor B.

---

# The importance of interaction

That is, knowledge of the AB interaction is more useful than knowledge of the main effect. A significant interaction will often mask the significance of main effects.

In the presence of significant interaction, the experimenter must usually examine the levels of one factor, say A, with the levels of the other factors fixed in order to draw conclusions about the effect of A.

---

# The advantages of factorial designs

- More efficient than one-factor-at-a-time experiments.
- Necessary when interactions may be present to avoid misleading conclusions.
- Allow the effects of a factor to be estimated at several levels of the other factors, yielding conclusions that are valid over a range of experimental conditions.
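---

# Effects from the regression fit

The point about a large interaction hiding a small main effect can be checked numerically. The sketch below refits the design with interaction using only base R and doubles the coefficients to recover the effects, relying on the -1/+1 coding used in the slides; the names `d22`, `fit22` and `effects` are illustrative.

```r
# Design with interaction, coded levels -1 (low) and 1 (high)
d22 <- expand.grid(FactorA = c(-1, 1), FactorB = c(-1, 1))
d22$Response <- c(20, 50, 40, 12)

# FactorA * FactorB expands to both main effects plus their interaction
fit22 <- lm(Response ~ FactorA * FactorB, data = d22)
b <- coef(fit22)

# With -1/+1 coding, each effect is twice its regression coefficient
effects <- 2 * b[c("FactorA", "FactorB", "FactorA:FactorB")]
effects  # A = 1, B = -9, AB = -29
```

The main effect of A is close to zero while the AB effect is large, which is why the interaction plot is more informative here than the main effects alone.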