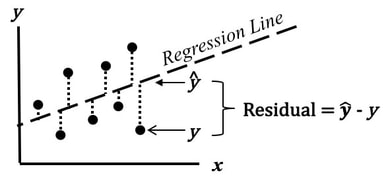

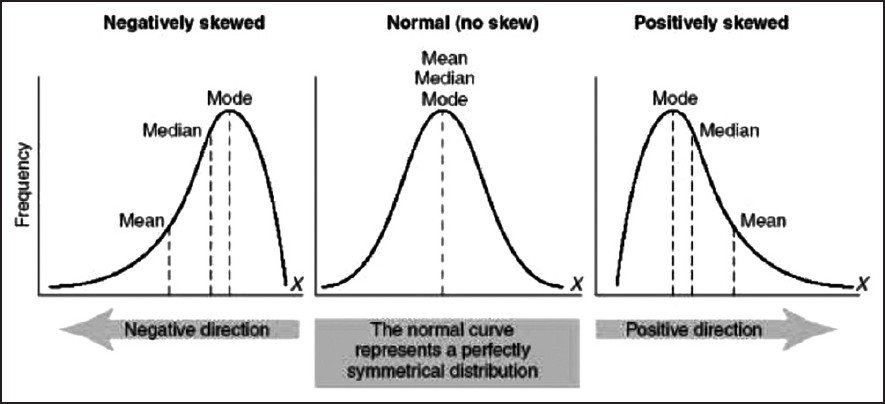

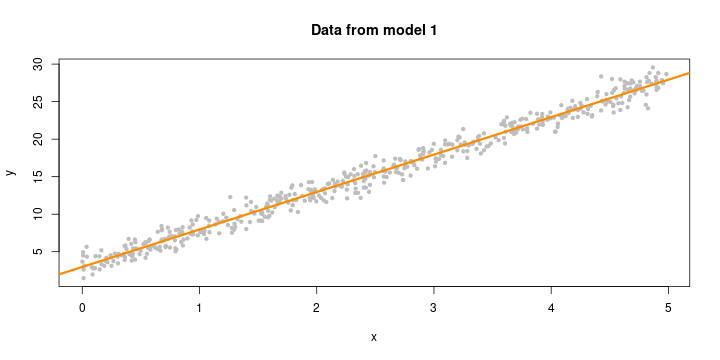

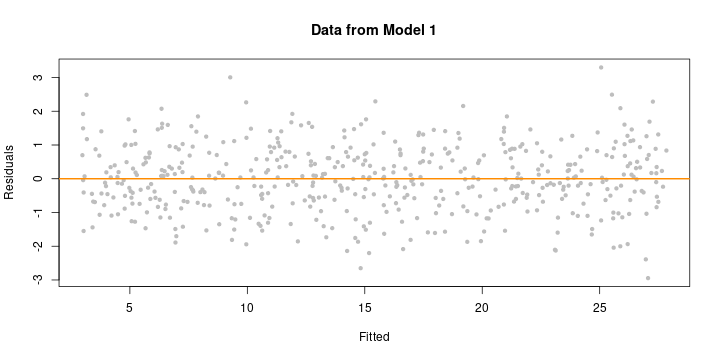

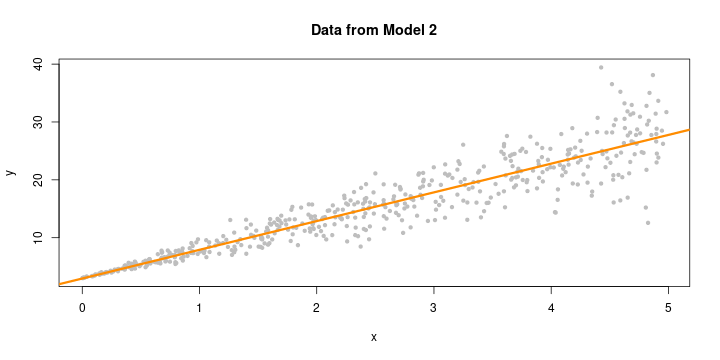

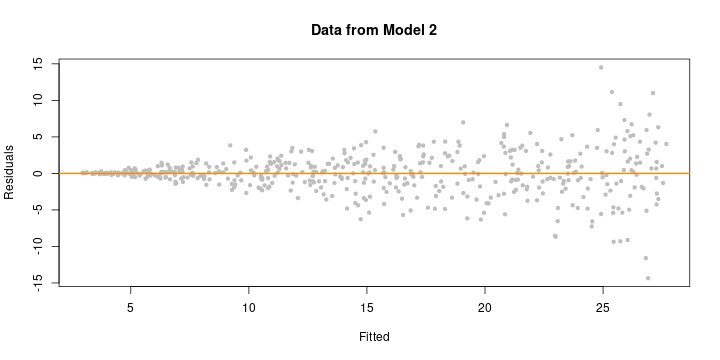

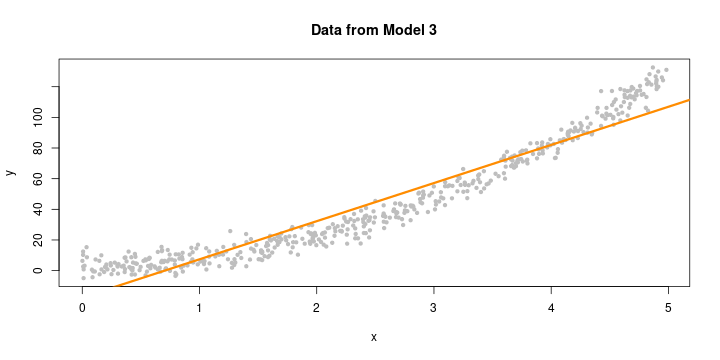

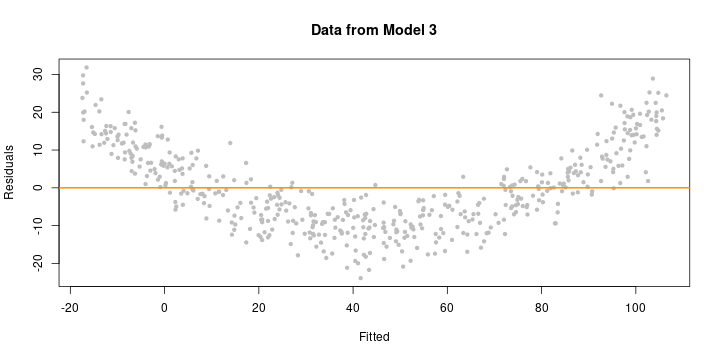

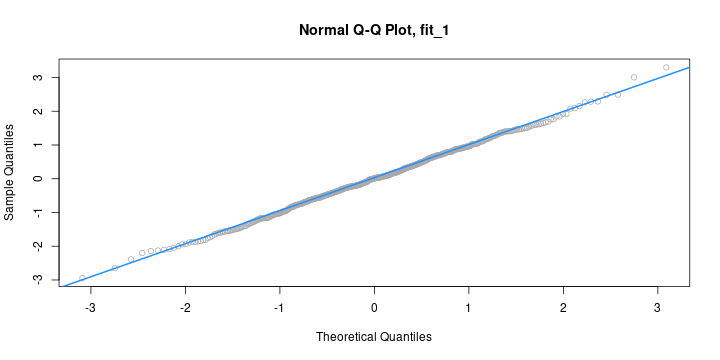

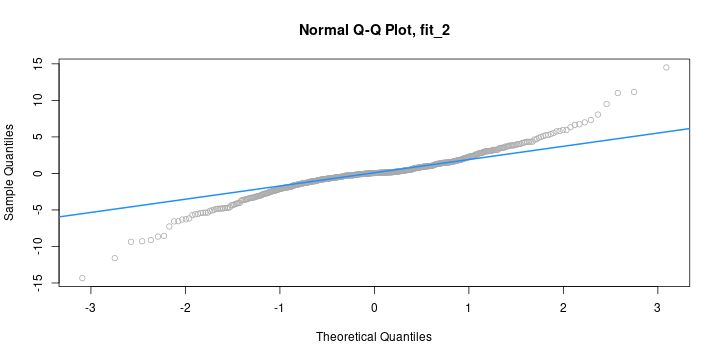

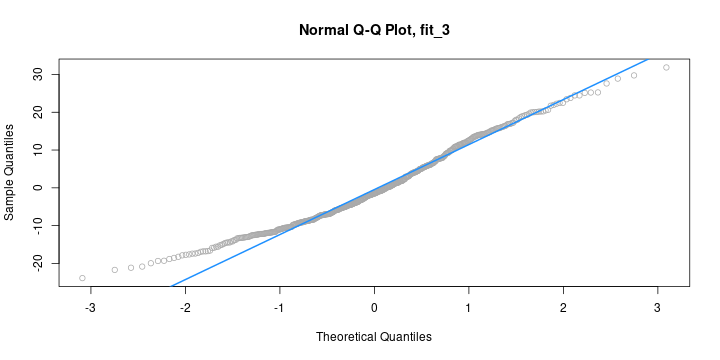

class: center, middle, inverse, title-slide .title[ # Design and Analysis of Experiments Using R ] .subtitle[ ## Checking model assumptions ] .author[ ### Olga Lyashevska ] .date[ ### 2022-10-14 ] --- # Model specification: separate means A simple statistical model can be written as: `$$\large y_{ij}=\mu_{i}+\epsilon_{ij}$$` Where `\(y_{ij}\)` is the response for the `\(j\)`th experimental unit to the `\(i\)`th level of the treatment factor; `\(\mu_i\)` is a different mean for each level of the treatment factor `\(i\)`; `\(\epsilon_{ij}\)` is an error. It is a full model. --- # Model specification: single mean Alternatives: `$$y_{ij}=\beta_{0} + \beta_{1}x_{i}+\epsilon_{ij}$$` `$$y_{ij}=\beta_{0} + \beta_{1}x_{i}+\beta_{2}x_{i}^2+\epsilon_{ij}$$` Single mean model is a special case of the separate means model, a reduced or restricted version. --- # Assumptions (model part) The full model assumes that every treatment factor has its own mean response `\(\mu_i\)`. Combined with the error structure, the separate means model implies that all the data are independent and normally distributed with constant variance: `$$y_{ij} ∼ N(\mu_{i}, \sigma^2 )$$` `\(∼N(\mu_{i},\sigma^2)\)` denotes "has a normal distribution with mean 0 and variance `\(\sigma^2\)`". Here: Each treatment group may have its own mean. --- # Assumptions (error part) We assume that errors `\(\epsilon^2\)` (deviations from the treatment means) are: - independent for different data values; - have mean zero; - have the same variance; - follow a Normal distribution. `$$\epsilon_{ij}\stackrel{iid}{\sim}N(0, \sigma^{2})$$` (iid independent and identically distributed) Normal distribution of errors needed for inference. --- # Assumptions checking The relationship between dependent and independent variables is linear. Observations: - Normal distribution - Zero mean - Constant variance Errors: - Normal distribution - Independence of the errors - Zero mean - Constant variance --- # Relative importance of assumptions - Independence of the errors in the most important and also most difficult to accommodate when fails (observations are close in time or space) - Constant variance (homoscedasticity) is intermediate, in that nonconstant variance (heteroscedasticity) can have a substantial effect on our inferences, but nonconstant variance can also be accommodated in many situations. - Normal distribution is the least important assumption, especially for large sample size. Some models depend more on normality than others. --- # Violation of assumptions Violation of assumptions can lead to bias in: - regression coefficients - standard errors - confidence intervals - significance tests --- # Errors and Residuals The quality of our inference depends on how well the errors `\(\epsilon_{ij}\)` conform to our assumptions, but that we do not observe the errors . The closest we can get to the errors are `\(r_{ij}\)`, the residuals from the full model. This unobservable nature of the errors can make diagnosis difficult in some situations. --- # Assessing violations Our assessments of assumptions about the errors are based on residuals. The raw residuals `\(r_{ij}\)` are simply the differences between the data `\(y_{ij}\)` and the treatment means `\(y_{i.}\)` .center[] Regardless the structure and complexity of the model the raw residuals are always the differences between the data and the fitted value. --- # Independence of errors Serial dependence or autocorrelation is one of the more common ways that independence can fail. Serial dependence arises when results close in time tend to be too similar (positive dependence) or too dissimilar (negative dependence). Serial dependence could result from a “drift” in the measuring instruments, a change in skill of the experimenter, changing environmental conditions, and so on. Plot the residuals on the vertical axis versus time sequence on the horizontal axis. --- # Example Serial autocorrelation .center[] Test: Durbin-Watson statistic to detect serial dependence --- # Heteroscedasticity <!-- Plot the residuals `\(r_{ij}\)` against the fitted values `$$y_{ij}−r_{ij}=y_{i.}$$` --> Plot the residuals against the fitted values. If the variance is constant, the vertical spread of the points will be about the same. Nonconstant variance is revealed as a pattern in the spread of the residuals. --- # Example .center[] --- # Asymmetry Non-normality, particularly asymmetry, can sometimes be lessened by transforming the response to a different scale. For example: - Skewness to the right is lessened by a square root, logarithm, or other transformation to a power less than one. - Skewness to the left is lessened by a square, cube, or other transformation to a power greater than one. --- # Example .center[] --- # Heuristic Square-root for moderate skew: - `sqrt(x)` for positively skewed data - `sqrt(max(x+1) - x)` for negatively skewed data log for greater skew: - `log10(x)` for positively skewed data - `log10(max(x+1) - x)` for negatively skewed data inverse for severe skew: - `1/x` for positively skewed data - `1/(max(x+1) - x)` for negatively skewed data --- # Transformations Transformations do not affect the `\(H_{0}: \mu_{1}=\mu_{2}=\mu_{i}\)` Transformations do affect means and therefore confidence intervals (Note confidence intervals computed on transformed scale do not backtransform into confidence intervals of means on the original scale). --- # Data simulation - Model 1 (constant variance): `$$Y= 3+5x+\epsilon, \epsilon\sim N(0, 1)$$` - Model 2 (non constant variance): `$$Y= 3+5x+\epsilon, \epsilon \sim N(0, x^{2})$$` - Model 3 (non linear): `$$Y= 3+5x^{2}+\epsilon, \epsilon \sim N(0, 25)$$` --- # Model 1 `$$Y= 3+5x+\epsilon, \epsilon \sim N(0, 1)$$` ```r sim_1 = function(sample_size = 500){ x = runif(n = sample_size) * 5 y = 3 + 5 * x + rnorm(n = sample_size, mean = 0, sd = 1) data.frame(x, y) } ``` --- # Simulate data ```r set.seed(42) sim_data_1 = sim_1() head(sim_data_1) ``` ``` # x y # 1 4.574030 24.773995 # 2 4.685377 26.475936 # 3 1.430698 8.954993 # 4 4.152238 23.951210 # 5 3.208728 20.341344 # 6 2.595480 14.943525 ``` --- # Fit model ```r plot(y ~ x, data = sim_data_1, col = "grey", pch = 20, main="Data from model 1") fit_1 = lm(y ~ x, data = sim_data_1) abline(fit_1, col = "darkorange", lwd = 3) ``` <!-- --> --- # Fitted versus Residuals Plot ```r plot(fitted(fit_1), resid(fit_1), col = "grey", pch = 20, xlab = "Fitted", ylab = "Residuals", main = "Data from Model 1") abline(h = 0, col = "darkorange", lwd = 2) ``` <!-- --> --- # Interpertation We should look for two things in this plot. - At any fitted value, the mean of the residuals should be roughly 0. If this is the case, the linearity assumption is valid. For this reason, we generally add a horizontal line at `\(y=0\)` to emphasize this point. - At every fitted value, the spread of the residuals should be roughly the same. If this is the case, the constant variance assumption is valid. --- # Model 2: `$$Y= 3+5x+\epsilon, \epsilon~N(0, x^{2})$$` ```r sim_2 = function(sample_size = 500) { x = runif(n = sample_size) * 5 y = 3 + 5 * x + rnorm(n = sample_size, mean = 0, sd = x) data.frame(x, y) } ``` --- # Simulate data Model 2 an example of non-constant variance. In this case, the variance is larger for larger values of the predictor variable `\(x\)` ```r set.seed(42) sim_data_2 = sim_2() fit_2 = lm(y ~ x, data = sim_data_2) ``` --- ```r plot(y ~ x, data = sim_data_2, col = "grey", pch = 20, main = "Data from Model 2") abline(fit_2, col = "darkorange", lwd = 3) ``` <!-- --> --- # Fitted versus Residual plot ```r plot(fitted(fit_2), resid(fit_2), col = "grey", pch = 20, xlab = "Fitted", ylab = "Residuals", main = "Data from Model 2") abline(h = 0, col = "darkorange", lwd = 2) ``` <!-- --> --- # Interpretation On the fitted versus residuals plot, we see two things very clearly. - For any fitted value, the residuals seem roughly centered at 0. This is good! The linearity assumption is not violated. - However, we also see very clearly, that for larger fitted values, the spread of the residuals is larger. This is bad! The constant variance assumption is violated here. --- # Model 3: `$$Y= 3+5x+\epsilon, \epsilon~N(0, 25)$$` ```r sim_3 = function(sample_size = 500) { x = runif(n = sample_size) * 5 y = 3 + 5 * x ^ 2 + rnorm(n = sample_size, mean = 0, sd = 5) data.frame(x, y) } ``` --- # Simulate data Model 3 is an example of a model where `\(Y\)` is not a linear combination of the predictors. In this case the predictor is `\(x\)`, but the model uses `\(x^{2}\)`. ```r set.seed(42) sim_data_3 = sim_3() fit_3 = lm(y ~ x, data = sim_data_3) ``` --- ```r plot(y ~ x, data = sim_data_3, col = "grey", pch = 20, main = "Data from Model 3") abline(fit_3, col = "darkorange", lwd = 3) ``` <!-- --> --- # Fitted versus residual plot ```r plot(fitted(fit_3), resid(fit_3), col = "grey", pch = 20, xlab = "Fitted", ylab = "Residuals", main = "Data from Model 3") abline(h = 0, col = "darkorange", lwd = 2) ``` <!-- --> --- # Interpretation This time on the fitted versus residuals plot, for any fitted value, the spread of the residuals is about the same. However, they are not even close to centered at zero! At small and large fitted values the model is underestimating, while at medium fitted values, the model is overestimating. These are systematic errors, not random noise. So the constant variance assumption is met, but the linearity assumption is violated. The form of our model is simply wrong. We’re trying to fit a line to a curve! --- # Test for heteroscedasticity *Breusch-Pagan Test* - `\(H_{0}:\)` Homoscedasticity. The errors have constant variance about the true model. - `\(H_{1}:\)` Heteroscedasticity. The errors have non-constant variance about the true model. This test will specifically test the constant variance assumption. Classical tests of constant variance are sensitive to nonnormality! --- ```r #install.packages("lmtest") library(lmtest) ``` Let’s try it on the three models we fit above. Recall, - `fit_1` had no violation of assumptions, - `fit_2` violated the constant variance assumption, but not linearity, - `fit_3` violated linearity, but not constant variance. --- ```r bptest(fit_1) ``` ``` ## ## studentized Breusch-Pagan test ## ## data: fit_1 ## BP = 1.0234, df = 1, p-value = 0.3117 ``` For `fit_1` we see a large p-value, so we do not reject the null of homoscedasticity, which is what we would expect. --- ```r bptest(fit_2) ``` ``` ## ## studentized Breusch-Pagan test ## ## data: fit_2 ## BP = 76.693, df = 1, p-value < 2.2e-16 ``` For `fit_2` we see a small p-value, so we reject the null of homoscedasticity. The constant variance assumption is violated. This matches our findings with a fitted versus residuals plot. --- ```r bptest(fit_3) ``` ``` ## ## studentized Breusch-Pagan test ## ## data: fit_3 ## BP = 0.33466, df = 1, p-value = 0.5629 ``` Lastly, for `fit_3` we again see a large p-value, so we do not reject the null of homoscedasticity, which matches our findings with a fitted versus residuals plot. --- # Histograms <!-- --> - For `fit_1` histogram appears very normal. - For `fit_3`, appears to be very non-normal. - However `fit_2` is not as clear. It does have a rough bell shape, however, it also has a very sharp peak. For this reason we will usually use more powerful tools such as Q-Q plots and the Shapiro-Wilk test for assessing the normality of errors. --- # Q-Q plot Q-Q plot for the residuals of `fit_1` to check if the errors could truly be normally distributed. ```r qqnorm(resid(fit_1), main = "Normal Q-Q Plot, fit_1", col = "darkgrey") qqline(resid(fit_1), col = "dodgerblue", lwd = 2) ``` <!-- --> --- For `fit_2`, we have a suspect Q-Q plot. We would probably not believe the errors follow a normal distribution. ```r qqnorm(resid(fit_2), main = "Normal Q-Q Plot, fit_2", col = "darkgrey") qqline(resid(fit_2), col = "dodgerblue", lwd = 2) ``` <!-- --> --- For `fit_3`, again we have a suspect Q-Q plot. We would probably not believe the errors follow a normal distribution. ```r qqnorm(resid(fit_3), main = "Normal Q-Q Plot, fit_3", col = "darkgrey") qqline(resid(fit_3), col = "dodgerblue", lwd = 2) ``` <!-- --> --- # Test for normality *Shapiro-Wilk Test* The null hypothesis assumes the data were sampled from a normal distribution, thus a small p-value indicates we believe there is only a small probability the data could have been sampled from a normal distribution. - `\(H_{0}:\)` data is normally distributed - `\(H_{1}:\)` data is not normally distributed --- ```r shapiro.test(resid(fit_1)) ``` ``` ## ## Shapiro-Wilk normality test ## ## data: resid(fit_1) ## W = 0.99858, p-value = 0.9622 ``` We see a large p-value (>0.05), so we accept the null of normality, and conclude that data is normal. --- ```r shapiro.test(resid(fit_2)) ``` ``` ## ## Shapiro-Wilk normality test ## ## data: resid(fit_2) ## W = 0.93697, p-value = 1.056e-13 ``` We see a small p-value (<0.05), so we reject the null of normality, and conclude that data is not normal. --- ```r shapiro.test(resid(fit_3)) ``` ``` ## ## Shapiro-Wilk normality test ## ## data: resid(fit_3) ## W = 0.97643, p-value = 3.231e-07 ``` We see a small p-value (<0.05), so we reject the null of normality, and conclude that data is not normal.