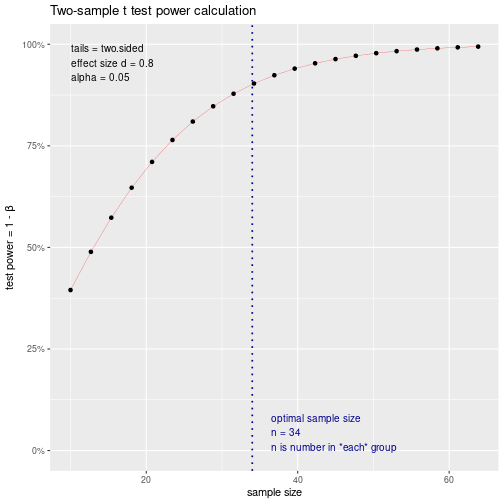

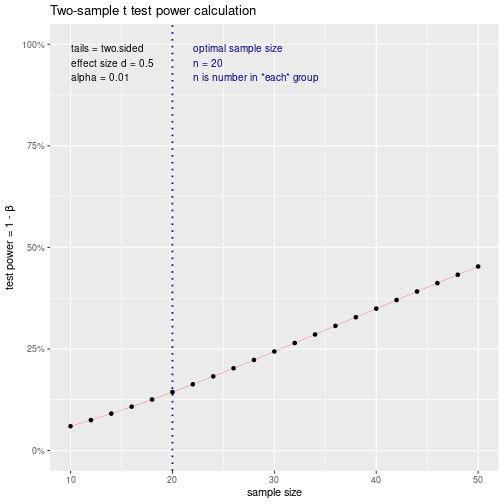

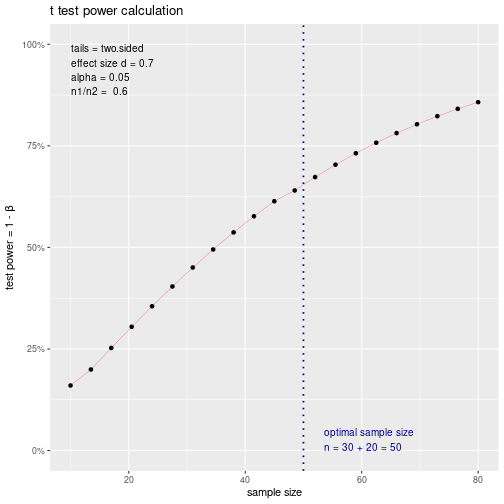

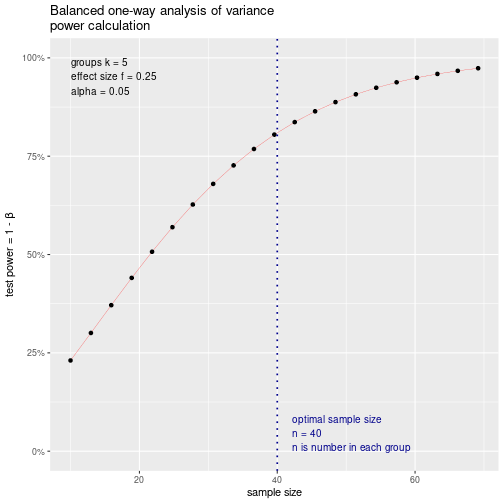

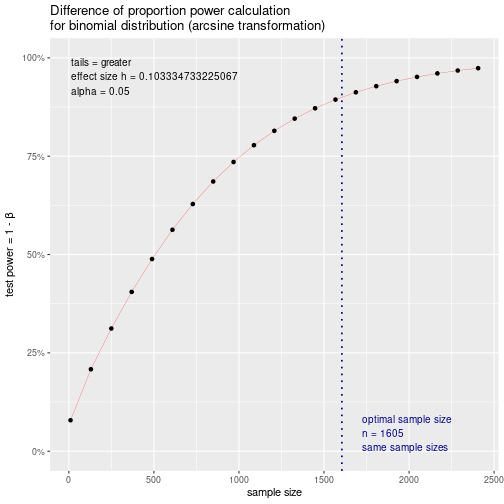

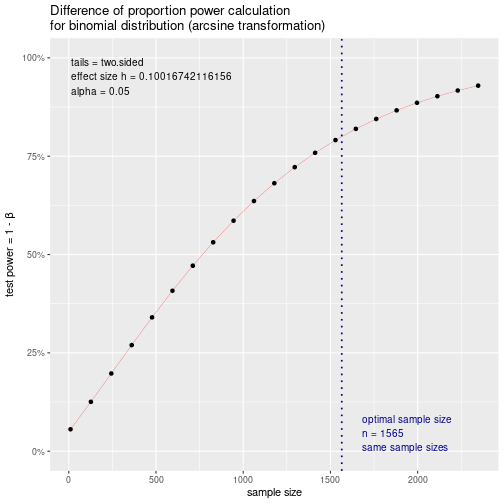

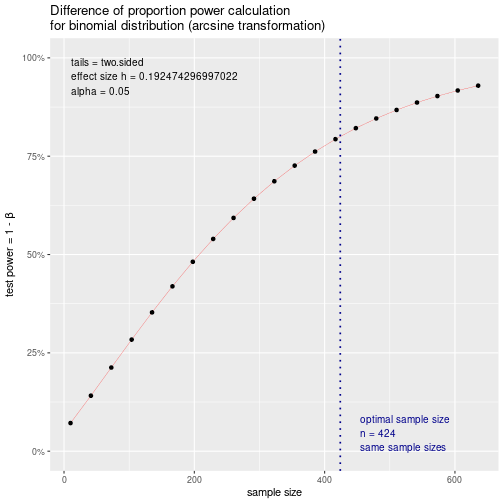

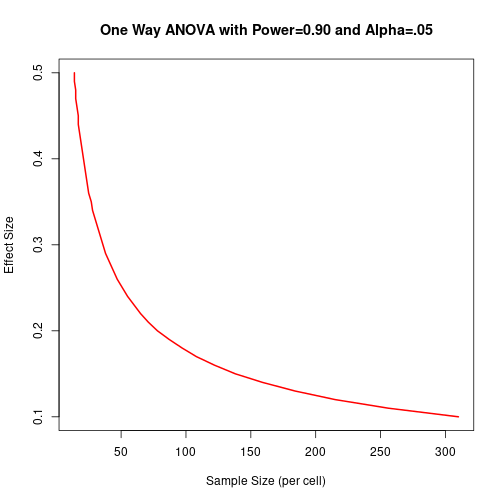

class: center, middle, inverse, title-slide

.title[
# Design and Analysis of Experiments Using R
]
.subtitle[
## Power Analysis and Sample Size
]
.author[
### Olga Lyashevska
]
.date[
### 2022-12-07
]

---

# Power analysis and sample size

In any experimental design, a critical decision is the choice of sample size (the number of replicates to run).

--

Power analysis allows us to determine:

- the **sample size** required to detect an effect of a given size with a given degree of confidence.

--

- the **probability of detecting an effect of a given size** with a given level of confidence, under sample size constraints.

---

# Typical questions:

- How many subjects do I need for my study?

--

- There are *x* people available for this study. Is the study worth doing?

--

Questions like these can be answered through power analysis, an important set of techniques in experimental design.

---

# Important concepts

- sample size (the number of replicates)

--

- effect size (a way of quantifying the size of the difference between two groups; the exact formula depends on the methodology employed)

--

- probability of a Type I error (the significance level, `\(\alpha\)`)

--

- 1 - probability of a Type II error (power, `\(1 - \beta\)`)

--

The power of a statistical test is the probability that it will yield a statistically significant result when a true effect of the specified size exists, that is, the probability of correctly rejecting a false null hypothesis.

[Read this paper: Ind Psychiatry J. 2009 Jul-Dec; 18(2): 127–131.](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3444174/)

---

# Important concepts

<img src="figs/Screenshot_20210217_123601.png" style="width: 80%" />

Given any three, you can calculate the fourth.

---

# Important concepts

Although the sample size and significance level are under the direct control of the researcher, power and effect size are affected more indirectly.

--

For example, as you relax the significance level (in other words, make it easier to reject the null hypothesis), power increases.

--

Similarly, increasing the sample size increases power.
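---

# Power, sample size and significance (sketch)

The two effects just described can be checked numerically. This is a minimal sketch that uses base R's `stats::power.t.test()` (not the **pwr** package used in the rest of these slides) to compute the power of a two-sample t test for an assumed standardized effect of 0.5 (`delta = 0.5`, `sd = 1`). The grids of sample sizes and significance levels are illustrative choices, not values from a real study.

```r
# Two-sample t test power for an ASSUMED standardized effect of 0.5
# (delta = 0.5 in units of sd = 1); base R stats package only.
n_grid <- c(10, 20, 40, 80)  # illustrative per-group sample sizes
power_by_n <- sapply(n_grid, function(n)
  power.t.test(n = n, delta = 0.5, sd = 1, sig.level = 0.05)$power)

alpha_grid <- c(0.01, 0.05, 0.10)  # illustrative significance levels
power_by_alpha <- sapply(alpha_grid, function(a)
  power.t.test(n = 20, delta = 0.5, sd = 1, sig.level = a)$power)

# Larger n (alpha fixed) and a more lenient alpha (n fixed) both raise power
print(data.frame(n = n_grid, power = round(power_by_n, 3)))
print(data.frame(sig.level = alpha_grid, power = round(power_by_alpha, 3)))
```

Power should increase down both printed columns: with more participants per group, and with a more lenient significance level.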
---

# Type I and Type II error (Recap)

<img src="figs/Screenshot_20210120_112118.png" style="width: 80%" />

[Read this paper: J Grad Med Educ. 2012 Sep; 4(3): 279–282.](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2996198/?report=classic)

---

# Research goal

Your research goal is typically to maximize the power of your statistical tests while maintaining an acceptable significance level and employing as small a sample size as possible.

--

That is, you want to

- maximize the chances of finding a real effect,
- minimize the chances of finding an effect that isn’t really there,
- while keeping study costs within reason.

---

# Hypothesis testing (Recap)

- Specify a hypothesis about a population parameter: the null hypothesis, `\(H_{0}\)`.

--

- Draw a sample from this population and calculate a statistic that’s used to make inferences about the population parameter.

--

- Assuming that the null hypothesis is true, calculate the probability of obtaining a sample statistic at least as extreme as the one observed.

--

- If the probability is sufficiently small, reject the null hypothesis in favor of its opposite, referred to as the alternative hypothesis, `\(H_{1}\)`.

---

# Example

Say you’re interested in evaluating the impact of cell phone use on driver reaction time. Your null hypothesis is `\(H_{0}: \mu_{1} - \mu_{2} = 0\)`

--

where `\(\mu_{1}\)` is the mean response time for drivers using a cell phone and `\(\mu_{2}\)` is the mean response time for drivers who are not using a cell phone.

--

Here, `\(\mu_{1}\)` and `\(\mu_{2}\)` are the population parameters of interest.

--

If you reject this null hypothesis, you’re left with the alternative, `\(H_{1}: \mu_{1} \neq \mu_{2}\)`, that is, the mean reaction times for the two conditions are not equal.

---

# Example (cont)

A sample of individuals is selected and randomly assigned to one of two conditions.

--

In the first condition, participants react to a series of driving challenges in a simulator while talking on a cell phone.
In the second condition, participants complete the same series of challenges but without a cell phone.

--

Overall reaction time is assessed for each individual.

---

# Example (cont)

Based on the sample data, you can calculate the statistic `\(t = (\bar{X}_{1} - \bar{X}_{2})/(s\sqrt{2/n})\)`, where `\(\bar{X}_{1}\)` and `\(\bar{X}_{2}\)` are the sample reaction time means in the two conditions, `\(s\)` is the pooled sample standard deviation, and `\(n\)` is the number of participants in each condition.

--

If the null hypothesis is true and you can assume that reaction times are normally distributed, this sample statistic will follow a `\(t\)` distribution with `\(2n - 2\)` degrees of freedom.

---

# Example (cont)

Using this fact, you can calculate the probability of obtaining a sample statistic this large or larger (in absolute value).

--

If the probability `\(p\)` is smaller than some predetermined cutoff (say `\(p < 0.05\)`), you reject the null hypothesis in favor of the alternative hypothesis.

--

This predetermined cutoff (0.05) is the significance level of the test.

---

# Example (cont)

There are four possible outcomes:

- If the null hypothesis is false and the statistical test leads you to reject it, you’ve made a correct decision. You’ve correctly determined that reaction time is affected by cell phone use.

--

- If the null hypothesis is true and you don’t reject it, again you’ve made a correct decision. Reaction time isn’t affected by cell phone use.

---

# Example (cont)

- If the null hypothesis is true but you reject it, you’ve committed a Type I error. You’ve concluded that cell phone use affects reaction time when it doesn’t.

--

- If the null hypothesis is false and you fail to reject it, you’ve committed a Type II error. Cell phone use affects reaction time, but you’ve failed to discern this.

---

# Situation 1

Let’s assume that we know from past experience that reaction time has a standard deviation of 1.25 seconds. Also suppose that a 1-second difference in reaction time is considered an important difference.
--

We would like to conduct a study in which we’re able to detect an effect size of `\(d = 1/1.25 = 0.8\)` or larger. Additionally, we want to be 90% sure of detecting such a difference if it exists (power = 0.9), and 95% sure that we won’t declare a difference to be significant when it’s actually due to random variability (significance level = 0.05).

--

How many participants will we need in our study?

---

# Equal sample sizes

<img src="figs/Screenshot_20210217_132221.png" style="width: 100%" />

---

# Effect size

<img src="figs/Screenshot_20210217_144830.png" style="width: 100%" />

---

.font90[

```r
library(pwr) # install.packages("pwr")
pwr.t.test(d = 0.8, sig.level = .05, power = .9, type = "two.sample", alternative = "two.sided")
```

```
## 
##      Two-sample t test power calculation 
## 
##               n = 33.82555
##               d = 0.8
##       sig.level = 0.05
##           power = 0.9
##     alternative = two.sided
## 
## NOTE: n is number in *each* group
```
]

---

# Conclusions

The results suggest that

- we need 34 participants in each group (33.83 rounded up, for a total of 68 participants) in order to detect an effect size of 0.8 with 90% certainty

- and no more than a 5% chance of erroneously concluding that a difference exists when, in fact, it doesn’t.

---

.font90[
<!-- -->
]

---

# Situation 2

Assume that in comparing the two conditions we want to be able to detect a 0.5 standard deviation difference in population means.

--

We want to limit the chances of falsely declaring the population means to be different to 1 out of 100. Additionally, we can only afford to include 40 participants in the study (20 per group).

--

What’s the probability that we’ll be able to detect a difference between the population means that’s this large, given the constraints outlined?
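---

# Situation 2: what the calculation does (sketch)

Power for a two-sided, two-sample t test with equal groups can be computed from the noncentral `\(t\)` distribution. Under `\(H_{1}\)` the statistic has `\(2n - 2\)` degrees of freedom and noncentrality parameter `\(d\sqrt{n/2}\)`. This minimal sketch plugs in the Situation 2 constraints (20 per group, `\(d = 0.5\)`, `\(\alpha = 0.01\)`) using base R only, and cross-checks the result against `stats::power.t.test()`. The variable names are illustrative.

```r
# Situation 2 inputs
n     <- 20    # participants per group
d     <- 0.5   # standardized effect size (Cohen's d)
alpha <- 0.01  # significance level

nu     <- 2 * (n - 1)            # degrees of freedom, 2n - 2
ncp    <- d * sqrt(n / 2)        # noncentrality parameter under H1
t_crit <- qt(1 - alpha / 2, nu)  # two-sided critical value under H0

# Probability that |T| exceeds the critical value when H1 is true
power_manual <- pt(t_crit, nu, ncp = ncp, lower.tail = FALSE) +
  pt(-t_crit, nu, ncp = ncp)

# Cross-check with base R (delta expressed in sd units)
power_base <- power.t.test(n = n, delta = d, sd = 1, sig.level = alpha)$power

print(c(manual = power_manual, power.t.test = power_base))
```

Both values should agree closely with the power that `pwr.t.test()` reports for the same inputs. The second `pt()` term, for rejecting in the "wrong" tail, is negligible here.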
---

.font90[

```r
pwr.t.test(n = 20, d = 0.5, sig.level = 0.01, type = "two.sample", alternative = "two.sided")
```

```
## 
##      Two-sample t test power calculation 
## 
##               n = 20
##               d = 0.5
##       sig.level = 0.01
##           power = 0.1439551
##     alternative = two.sided
## 
## NOTE: n is number in *each* group
```
]

---

# Conclusion

With 20 participants in each group, an a priori significance level of 0.01, and a dependent variable standard deviation of 1.25 seconds, we have a 14% chance of declaring significant a true difference of 0.625 seconds (1.25 × 0.5 = 0.625).

--

Conversely, there’s an 86% chance that we’ll miss the effect that we’re looking for. We may want to seriously rethink putting the time and effort into the experiment as it stands.

---

.font90[
<!-- -->
]

---

# Not equal sample sizes

<img src="figs/Screenshot_20210217_140249.png" style="width: 100%" />

---

# Situation 3

Assume that in comparing the two conditions we want to be able to detect a 0.7 standard deviation difference in population means.

--

We want to limit the chances of falsely declaring the population means to be different to 5 out of 100. Additionally, we can only afford to include 50 participants in the study (30 in group 1 and 20 in group 2).

--

What’s the probability that we’ll be able to detect a difference between the population means that’s this large, given the constraints outlined?

---

# Do it yourself - 10 min

Then write your conclusions.

---

.font90[

```r
pwr.t2n.test(n1 = 30, n2 = 20, d = 0.7, sig.level = 0.05, alternative = "two.sided")
```

```
## 
##      t test power calculation 
## 
##              n1 = 30
##              n2 = 20
##               d = 0.7
##       sig.level = 0.05
##           power = 0.6613646
##     alternative = two.sided
```
]

---

# Conclusions

With 30 and 20 participants in groups 1 and 2 respectively, an a priori significance level of 0.05, and a dependent variable standard deviation of 1.25 seconds, we have a 66% chance of declaring significant a true difference of 0.875 seconds (1.25 × 0.7 = 0.875).
--

Conversely, there’s a 34% chance that we’ll miss the effect that we’re looking for.

--

We still may want to rethink putting the time and effort into the experiment as it stands.

---

.font90[
<!-- -->
]

---

# ANOVA

<img src="figs/Screenshot_20210217_143059.png" style="width: 100%" />

---

# Effect size

<img src="figs/Screenshot_20210217_144712.png" style="width: 100%" />

---

# Do it yourself - 10 min

For a one-way ANOVA comparing five groups, calculate the sample size needed in each group to obtain a power of 0.80, given an effect size of `\(f = 0.25\)` and a significance level of 0.05.

---

.font90[

```r
pwr.anova.test(k = 5, f = 0.25, sig.level = 0.05, power = 0.8)
```

```
## 
##      Balanced one-way analysis of variance power calculation 
## 
##               k = 5
##               n = 39.1534
##               f = 0.25
##       sig.level = 0.05
##           power = 0.8
## 
## NOTE: n is number in each group
```
]

---

# Conclusions

Rounding 39.15 up, we need 40 participants in each group, so the total sample size is 5 × 40 = 200.

Here we have to estimate what the means of the five groups will be, along with the common variance. When we have no idea what to expect (because the data have yet to be collected), various approaches can be applied.

---

<!-- -->

---

# Test of proportions

<img src="figs/Screenshot_20210217_150917.png" style="width: 100%" />

---

# Effect size

<img src="figs/Screenshot_20210217_151703.png" style="width: 100%" />

---

# Example

We suspect that a popular medication relieves symptoms in 60% of users. A new (and more expensive) medication will be marketed if it improves symptoms in 65% of users.

--

How many participants will we need to include in a study comparing these two medications if we want to detect a difference this large?

--

Assume that we want to be 90% confident in a conclusion that the new drug is better and 95% confident that we won’t reach this conclusion erroneously. We’ll use a one-tailed test because we’re only interested in assessing whether the new drug is better than the standard.
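---

# Test of proportions: effect size by hand (sketch)

Cohen's `\(h\)` is the difference between arcsine-square-root transformed proportions, `\(h = 2\arcsin\sqrt{p_{1}} - 2\arcsin\sqrt{p_{2}}\)`. As far as we know, this is the formula `ES.h()` uses. Assuming the normal approximation that `pwr.2p.test()` is based on, a one-sided test with equal groups has power `\(\Phi(h\sqrt{n/2} - z_{1-\alpha})\)`. Solving for `\(n\)` gives `\(n = 2\left((z_{1-\alpha} + z_{1-\beta})/h\right)^{2}\)` per group. This minimal base-R sketch applies that to the medication example. The helper `cohen_h()` is a hypothetical name, not a **pwr** function.

```r
# Cohen's h: difference of arcsine-square-root transformed proportions
cohen_h <- function(p1, p2) 2 * asin(sqrt(p1)) - 2 * asin(sqrt(p2))

h     <- cohen_h(0.65, 0.60)  # new drug vs standard drug
alpha <- 0.05                 # one-sided significance level
pow   <- 0.90                 # desired power

# Closed-form per-group n for a one-sided test with equal group sizes
n_per_group <- 2 * ((qnorm(1 - alpha) + qnorm(pow)) / h)^2

print(c(h = h, n = n_per_group, n_rounded_up = ceiling(n_per_group)))
```

The closed form avoids the numerical root-finding a general-purpose function needs, but it only holds for this one-sided, equal-n case.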
---

.font90[

```r
pwr.2p.test(h = ES.h(0.65, 0.60), sig.level = .05, power = .9, alternative = "greater")
```

```
## 
##      Difference of proportion power calculation for binomial distribution (arcsine transformation) 
## 
##               h = 0.1033347
##               n = 1604.007
##       sig.level = 0.05
##           power = 0.9
##     alternative = greater
## 
## NOTE: same sample sizes
```
]

---

# Conclusions

Based on these results (1,604.007 rounded up), we’ll need to conduct a study with 1,605 individuals receiving the new drug and 1,605 receiving the existing drug in order to meet the criteria.

---

<!-- -->

---

# Do it yourself - 10 min

We want to randomly sample male and female college students and ask them if they consume alcohol at least once a week.

Our null hypothesis is no difference in the proportion that answer yes. Our alternative hypothesis is that there is a difference. This is a two-sided alternative: one gender has a higher proportion, but we don't know which.

We would like to detect a difference as small as 5 percentage points. How many students do we need to sample in each group if we want 80% power and a significance level of 0.05?

We think one group proportion is 50% and the other 55%.

---

```r
(pwr.2p.test(h = ES.h(0.55, 0.50), sig.level = 0.05, power = 0.8, alternative = "two.sided"))
```

```
## 
##      Difference of proportion power calculation for binomial distribution (arcsine transformation) 
## 
##               h = 0.1001674
##               n = 1564.529
##       sig.level = 0.05
##           power = 0.8
##     alternative = two.sided
## 
## NOTE: same sample sizes
```

---

# Conclusions

We need to sample 1,565 males and 1,565 females to detect the 5-percentage-point difference with 80% power.

---

<!-- -->

---

# Do it yourself - 5 min

Let’s change the proportions to 5% and 10%. We still want to detect a difference as small as 5 percentage points. How many students do we need?
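---

# Same gap, different effect size (sketch)

One way to approach this exercise is to compute Cohen's `\(h\)` by hand for both pairs of proportions. The arcsine-square-root transform stretches the scale near 0 and 1. As a result, the same 5-point gap gives a larger `\(h\)` at 5% vs 10% than at 50% vs 55%, and so needs a smaller sample. This sketch uses base R only, and `cohen_h()` and `n_two_sided()` are hypothetical helpers. The closed-form `n` ignores the negligible chance of rejecting in the wrong tail, so it may differ slightly from `pwr.2p.test()` in the decimals.

```r
# Cohen's h: difference of arcsine-square-root transformed proportions
cohen_h <- function(p1, p2) 2 * asin(sqrt(p1)) - 2 * asin(sqrt(p2))

# Approximate per-group n for a two-sided test with equal groups:
#   n = 2 * ((z_{1 - alpha/2} + z_{power}) / h)^2
n_two_sided <- function(h, alpha = 0.05, power = 0.80) {
  2 * ((qnorm(1 - alpha / 2) + qnorm(power)) / abs(h))^2
}

# Same absolute gap (5 percentage points) at two different baselines
h_mid <- cohen_h(0.55, 0.50)
h_low <- cohen_h(0.10, 0.05)

n_mid <- n_two_sided(h_mid)
n_low <- n_two_sided(h_low)

print(round(c(h_mid = h_mid, h_low = h_low), 4))
print(ceiling(c(n_mid = n_mid, n_low = n_low)))  # round up to whole people
```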
---

```r
pwr.2p.test(h = ES.h(0.10, 0.05), sig.level = 0.05, power = 0.8, alternative = "two.sided")
```

```
## 
##      Difference of proportion power calculation for binomial distribution (arcsine transformation) 
## 
##               h = 0.1924743
##               n = 423.7319
##       sig.level = 0.05
##           power = 0.8
##     alternative = two.sided
## 
## NOTE: same sample sizes
```

---

# Conclusions

Even though the absolute difference between the proportions is the same (5 percentage points), the required sample size is now 424 per group. 10% vs 5% is actually a bigger difference than 55% vs 50%.

A heuristic way to see why is to compare the ratios: 55/50 = 1.1, while 10/5 = 2.

---

<!-- -->

---

# Effect size in novel situations

In power analysis, the expected effect size is the most difficult parameter to determine.

--

It typically requires that you have experience with the subject matter and the measures employed.

--

For example, the data from past studies can be used to calculate effect sizes, which can then be used to plan future studies.

---

# Use benchmarks

When you have no idea what effect size to expect, use the benchmarks in this table. These are only general suggestions and may not suit all fields.

<img src="figs/Screenshot_20210217_155828.png" style="width: 100%" />

---

# Finally

An alternative is to vary the study parameters and note the impact on sample size and power.

For example, assume that we want to compare five groups using a one-way ANOVA, a 0.05 significance level and 0.9 power.

---

```r
library(pwr)

# Range of effect sizes (Cohen's f) to explore
es <- seq(0.1, 0.5, 0.01)
samsize <- numeric(length(es))

# For each effect size, the per-group n needed for power = 0.9 (rounded up)
for (i in seq_along(es)) {
  result <- pwr.anova.test(k = 5, f = es[i], sig.level = 0.05, power = 0.9)
  samsize[i] <- ceiling(result$n)
}

plot(samsize, es, type = "l", lwd = 2, col = "red",
     ylab = "Effect Size", xlab = "Sample Size (per cell)",
     main = "One Way ANOVA with Power=.90 and Alpha=.05")
```

---

<!-- -->

---

# Summary

Power analysis is typically an iterative process.
The investigator varies the parameters of sample size, effect size, desired significance level, and desired power to observe their impact on each other.

--

Information from past research (particularly regarding effect sizes) can be used to design more effective and efficient future research.

--

An important side benefit of power analysis is the shift away from a singular focus on binary hypothesis testing (that is, does an effect exist or not?) toward an appreciation of the size of the effect under consideration.