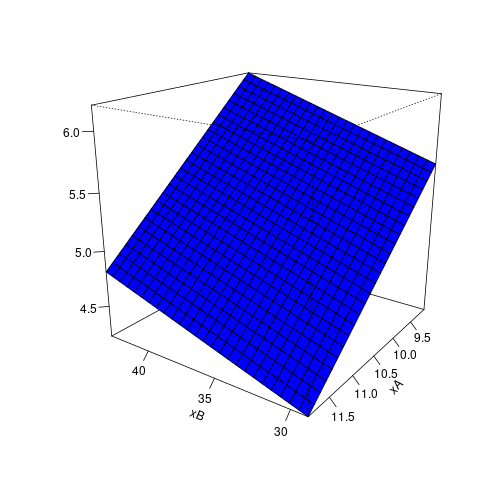

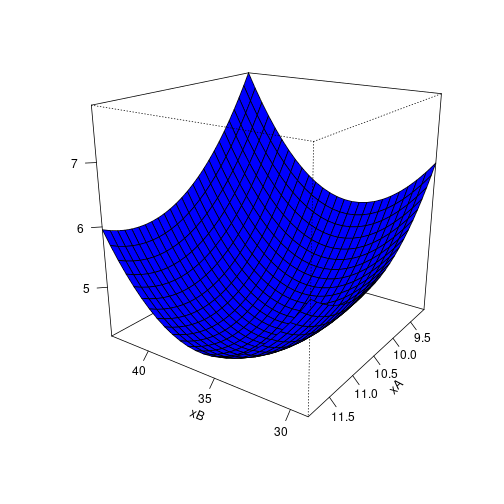

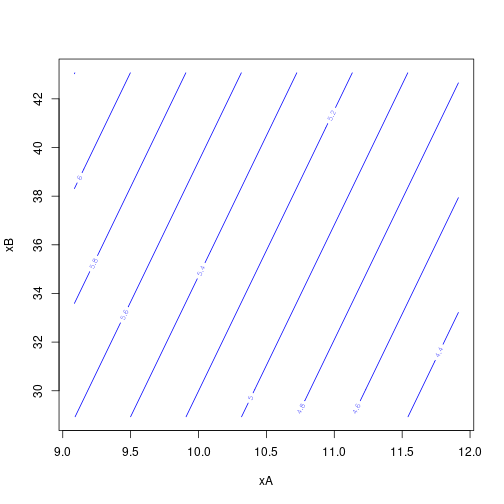

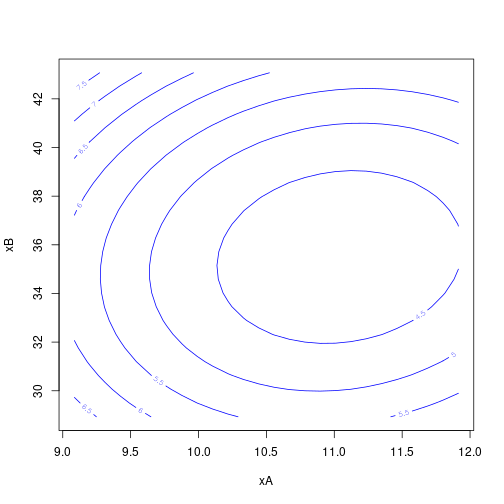

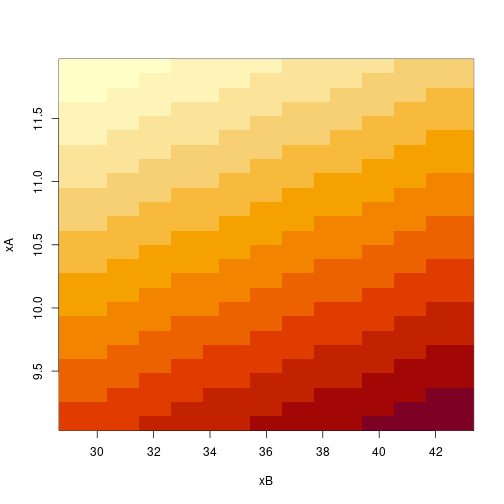

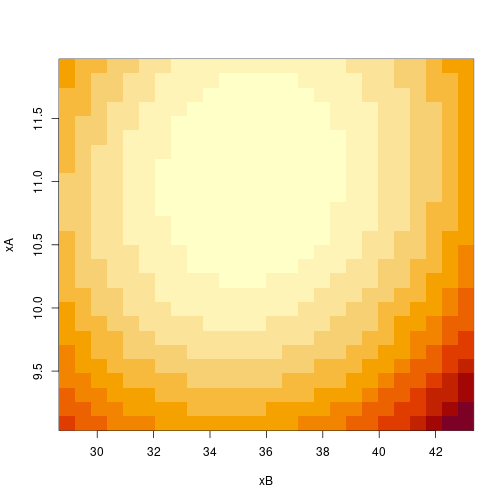

class: center, middle, inverse, title-slide .title[ # Design and Analysis of Experiments Using R ] .subtitle[ ## Response Surface Design ] .author[ ### Olga Lyashevska ] .date[ ### 2022-12-01 ] --- # Introduction Factorial designs are used to describe how the response varies as a function of the treatments and determine treatments that give optimal responses, e.g. maxima or minima. However when treatment factors vary across a *continuous range* of values, other treatment designs such as response surface designs may be more efficient. --- # Example Suppose we bake a cake a certain time `\(x_1\)` at a certain temperature `\(x_2\)` ; time and temperature can vary *continuously*. We could, in principle, bake cakes for any time and temperature combination. Assuming that all the cake batters are the same, the quality of the cakes `\(y\)` will depend on the time and temperature of baking. --- # Model Assuming the relationship between the response `\(y\)` and the factor settings `\(x\)` to be a *nonlinear* equation is given by: `$$y = f(x)+\epsilon$$` -- For 2 factor we express this as: `$$y = f(x_1, x_2) + \epsilon$$` where `\(f\)` is a nonlinear function and `\(\epsilon\)` is the random effect of experimental error. --- # Goal - to determine the factor settings that produce a maximum or minimum response (optimisation); - to map the *nonlinear* relationship between the response and the factor settings over the *continuous* factor space; --- # When used - with small number of factors (~3); - at the last stage of experimentation when the important factors have already been determined in earlier experiments, and the purpose is to describe in detail the relationship between the factors and the response; -- It is usually known or assumed that a simple linear model, even with interactions, is not good enough to represent that relationship. --- # Application Response surface methods have found considerable use in industry especially in chemical processes where the reaction yield or cost of production can be optimized as a function of the settings of controllable process factors. -- These designs have also found successful applications in food science, engineering, biology, psychology, textiles, education, and many other areas. --- # Hypothetical response surface <img src="figs/Screenshot_20210210_101347.png" style="width: 90%" /> Fairly simple --- # Hypothetical response surface <img src="figs/Screenshot_20210210_114414.png" style="width: 90%" /> More complicated --- # Hypothetical contour plot <img src="figs/Screenshot_20210210_114823.png" style="width: 90%" /> --- # Function Form `All models are wrong; some models are useful. George Box` We often don’t know anything about the shape or form of the function `\(f\)` , so any mathematical model that we assume for `\(f\)` is surely wrong. -- But polynomials are often adequate models, at least in a "small" region. We will consider *first-order models* and *second-order models* for response. --- # First-order form `$$y_{ij} = \beta_{0} + \beta_{1}x_{1i} + \beta_{2}x_{2i} + \ldots + \beta_{q}x{qi} + \epsilon_{ij}$$` `$$=\beta_{0} + \sum_{k=1}^{q}\beta_{k}x_{ki} + \epsilon_{ij}$$` `$$=\beta_{0}+\mathbf{x_{i}^{\prime}\beta} + \epsilon_{ij}$$` where `\(x_i = (x_{1i} , x_{2i} ,\ldots, x_{qi})^{\prime}\)` and `\(\beta=(\beta_1 ,\beta_2 ,\ldots, \beta_q)^{\prime}\)` This is an ordinary multiple regression model, with design variables as predictors and `\(\beta_k\)`'s as regression coefficients. --- # First-order form Suitable for local approximations. Describe inclined planes: flat surfaces, possibly tilted. Appropriate for describing portions of a response surface that are separated from maxima, minima, ridge lines, and other strongly curved regions. --- # Think What parts could be described by the first order? <img src="figs/Screenshot_20210210_114414.png" style="width: 90%" /> --- # Steepest ascent and descent First-order models tell us which way is up or down, they show direction of steepest ascent or descent. -- The method of steepest ascent is a procedure for moving sequentially in the direction of the maximum increase in the response. -- If minimization is desired, then we call this technique the method of steepest descent. -- Step is proportional to `\(\beta\)`. The direction is only approximately correct. --- # Path of steepest ascent <img src="figs/Screenshot_20210210_130221.png" style="width: 80%" /> --- # First-order designs 3 basic needs: - estimate parameters of the model - estimate random error and lack of fit - estimate efficiency of the design (variance of estimation and resources) --- # Random error vs lack of fit - Data might not fit a model because of random error `\(\epsilon\)`; this is pure error. - Data also might not fit a model because the model is misspecified and does not truly describe the mean structure; this is lack of fit. -- Our models are approximations, so we need to know when the lack of fit becomes large relative to pure error. -- This is particularly true for first-order models. -- It is also true for second-order models, though we are more likely to reduce our region of modeling rather than move to higher orders. --- # Example 1 A chemical engineer is interested in determining the operating conditions that maximize the yield of a process. -- Two controllable variables influence process yield: reaction time and reaction temperature. -- The engineer is currently operating the process with a reaction time of 35 minutes and a temperature of 155F, which result in yields of around 40 percent. -- Because it is unlikely that this region contains the optimum, a first-order model is fitted. --- # Example 1 (cont.) The engineer decides that the region of exploration for fitting the first-order model should be (30, 40) minutes of reaction time and (150, 160) of temperature. -- To simplify the calculations, the independent variables will be coded to (−1, 1) interval. -- The (coded) low and high values for each variable are ±1; the *center points* are m observations taken with all variables at 0. --- # Example 1 (cont.) <img src="figs/Screenshot_20210210_130951.png" style="width: 80%" /> Why do we replicate center points? --- # Example 1 (cont.) - the variation among the responses at the center point provides an estimate of pure error. -- - the contrast between the mean of the center points and the mean of the factorial points provides a test for lack of fit. -- - when the data follow a first-order model, this contrast has expected value zero; -- - when the data follow a second-order model, this contrast has an expectation that depends on the pure quadratic terms. --- # R code ```r example1.data = data.frame(list( x1 = c(-1, -1, 1, 1, 0, 0, 0, 0, 0), x2 = c(-1, 1, -1, 1, 0, 0, 0, 0, 0), response = c(39.3, 40, 40.9, 41.5, 40.3, 40.5, 40.7, 40.2, 40.6))) head(example1.data) ``` ``` ## x1 x2 response ## 1 -1 -1 39.3 ## 2 -1 1 40.0 ## 3 1 -1 40.9 ## 4 1 1 41.5 ## 5 0 0 40.3 ## 6 0 0 40.5 ``` --- # Least squares model .font70[ ```r summary(lm(response~x1+x2, data=example1.data)) ``` ``` ## ## Call: ## lm(formula = response ~ x1 + x2, data = example1.data) ## ## Residuals: ## Min 1Q Median 3Q Max ## -0.244444 -0.044444 0.005556 0.055556 0.255556 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 40.44444 0.05729 705.987 5.45e-16 *** ## x1 0.77500 0.08593 9.019 0.000104 *** ## x2 0.32500 0.08593 3.782 0.009158 ** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.1719 on 6 degrees of freedom ## Multiple R-squared: 0.941, Adjusted R-squared: 0.9213 ## F-statistic: 47.82 on 2 and 6 DF, p-value: 0.0002057 ``` ] --- # Least squares model `\(\hat{y} = 40.44+0.775x_{1}+0.325x_{2}\)` -- The first-order model assumes that the variables `\(x_1\)` and `\(x_2\)` have an additive effect on the response. Interaction between the variables would be represented a cross-product term `\(x_{1}x_{2}\)` added to the model. -- 1. Obtain an estimate of error. 2. Check for interactions (cross-product terms) in the model. 3. Check for quadratic effects (curvature). --- # Lets expand model ```r anova(lm(response~x1*x2+I(x1^2)+I(x2^2), data=example1.data)) ``` ``` ## Analysis of Variance Table ## ## Response: response ## Df Sum Sq Mean Sq F value Pr(>F) ## x1 1 2.40250 2.40250 55.8721 0.001713 ** ## x2 1 0.42250 0.42250 9.8256 0.035030 * ## I(x1^2) 1 0.00272 0.00272 0.0633 0.813741 ## x1:x2 1 0.00250 0.00250 0.0581 0.821316 ## Residuals 4 0.17200 0.04300 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` - estimate of error: 0.043 --- # Lets expand model .font60[ ```r summary(lm(response~x1*x2+I(x1^2)+I(x2^2), data=example1.data)) ``` ``` ## ## Call: ## lm(formula = response ~ x1 * x2 + I(x1^2) + I(x2^2), data = example1.data) ## ## Residuals: ## 1 2 3 4 5 6 7 ## 3.469e-17 -1.214e-17 -9.620e-18 2.739e-17 -1.600e-01 4.000e-02 2.400e-01 ## 8 9 ## -2.600e-01 1.400e-01 ## ## Coefficients: (1 not defined because of singularities) ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 40.46000 0.09274 436.291 1.66e-10 *** ## x1 0.77500 0.10368 7.475 0.00171 ** ## x2 0.32500 0.10368 3.135 0.03503 * ## I(x1^2) -0.03500 0.13910 -0.252 0.81374 ## I(x2^2) NA NA NA NA ## x1:x2 -0.02500 0.10368 -0.241 0.82132 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.2074 on 4 degrees of freedom ## Multiple R-squared: 0.9427, Adjusted R-squared: 0.8854 ## F-statistic: 16.45 on 4 and 4 DF, p-value: 0.009471 ``` ] --- # Checking adequacy of the first-order model - the interaction and curvature checks are not significant, whereas the F-test for the overall regression is significant. - coefficients `\(β_{1}\)` and `\(\beta_{2}\)` are large relative to their standard errors. At this point (for this region), we have no reason to question the adequacy of the first-order model. --- # Response surface regression .font60[ ```r library(rsm) #if not installed run: install.packages("rsm") summary(rsm(response~FO(x1, x2), data=example1.data)) ``` ``` ## ## Call: ## rsm(formula = response ~ FO(x1, x2), data = example1.data) ## ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 40.444444 0.057288 705.9869 5.451e-16 *** ## x1 0.775000 0.085932 9.0188 0.000104 *** ## x2 0.325000 0.085932 3.7821 0.009158 ** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Multiple R-squared: 0.941, Adjusted R-squared: 0.9213 ## F-statistic: 47.82 on 2 and 6 DF, p-value: 0.0002057 ## ## Analysis of Variance Table ## ## Response: response ## Df Sum Sq Mean Sq F value Pr(>F) ## FO(x1, x2) 2 2.82500 1.41250 47.8213 0.0002057 ## Residuals 6 0.17722 0.02954 ## Lack of fit 2 0.00522 0.00261 0.0607 0.9419341 ## Pure error 4 0.17200 0.04300 ## ## Direction of steepest ascent (at radius 1): ## x1 x2 ## 0.9221944 0.3867267 ## ## Corresponding increment in original units: ## x1 x2 ## 0.9221944 0.3867267 ``` ] --- # RSM package - The syntax `FO(x1, x2)` fits the first order model in both coded response variables. - The `summary(model)` command generates pertinent information, including: parameter estimates, standard errors, and corresponding t-tests; the analysis of variance table, including a lack-of-fit test; and the direction of steepest ascent with respect to coded and uncoded variables. --- # Steepest ascent ```r steepest(rsm(response~FO(x1, x2), data=example1.data), dist=seq(0, 5, by = 1), descent = F) ``` ``` ## Path of steepest ascent from ridge analysis: ``` ``` ## dist x1 x2 | yhat ## 1 0 0.000 0.000 | 40.444 ## 2 1 0.922 0.387 | 41.285 ## 3 2 1.844 0.773 | 42.125 ## 4 3 2.766 1.160 | 42.965 ## 5 4 3.689 1.547 | 43.806 ## 6 5 4.611 1.934 | 44.647 ``` --- # Steepest ascent Function `steepest` provides predicted response at steps along the path of steepest ascent, stepping from the origin (which is the design center point for coded data) at distances from zero to five in unit increments, showing the location of each step in terms of the coded and uncoded predictor variables. --- # Example 2 Response surface method was utilized to study the effects of anode-cathode separation (factor A) and cathodic current density (factor B) on the standard deviation of copper coating thickness. ```r copper.data1 =read.table("../datasets/copper.txt", header = T) head(copper.data1) ``` ``` ## xA xB s ## 1 9.5 31 5.60 ## 2 9.5 41 6.45 ## 3 11.5 31 4.84 ## 4 11.5 41 5.19 ## 5 10.5 36 4.32 ## 6 10.5 36 4.25 ``` --- # Fit raw data .font60[ ```r library(rsm) model1 = rsm(s~ FO(xA, xB), data= copper.data1) summary(model1) ``` ``` ## ## Call: ## rsm(formula = s ~ FO(xA, xB), data = copper.data1) ## ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 8.823036 2.757839 3.1993 0.01508 * ## xA -0.489383 0.216084 -2.2648 0.05792 . ## xB 0.042375 0.043217 0.9805 0.35950 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Multiple R-squared: 0.4653, Adjusted R-squared: 0.3125 ## F-statistic: 3.045 on 2 and 7 DF, p-value: 0.1118 ## ## Analysis of Variance Table ## ## Response: s ## Df Sum Sq Mean Sq F value Pr(>F) ## FO(xA, xB) 2 2.27507 1.13753 3.0453 0.11181 ## Residuals 7 2.61473 0.37353 ## Lack of fit 6 2.61228 0.43538 177.7063 0.05736 ## Pure error 1 0.00245 0.00245 ## ## Direction of steepest ascent (at radius 1): ## xA xB ## -0.99627222 0.08626511 ## ## Corresponding increment in original units: ## xA xB ## -0.99627222 0.08626511 ``` ] --- # Conclusions What conclusions can we draw? -- Based on the t-tests, neither main effect is significantly different from zero, indicating either that the experimental region is in the vicinity of the peak, or that neither factor affects the response. -- The lack-of-fit test yields a p-value of 0.05, indicating significant lack-of-fit of the first order model, though the test does not distinguish whether this is due to interaction or quadratic effects. --- # Data coding .font80[ ```r # copper.data2<-coded.data(copper.data1,zA~(xA-10.5)/5, zB~(xB-36)/5) # apply(copper.data2, 2, function(x) length(unique(x))) copper.data2<-coded.data(copper.data1) ``` ``` ## Warning in coded.data(copper.data1): Automatic codings created -- may not be ## what you want ``` ```r head(copper.data2) ``` ``` ## xA xB s ## 1 9.5 31 5.60 ## 2 9.5 41 6.45 ## 3 11.5 31 4.84 ## 4 11.5 41 5.19 ## 5 10.5 36 4.32 ## 6 10.5 36 4.25 ## ## Data are stored in coded form using these coding formulas ... ## x1 ~ (xA - 10.5)/1.414 ## x2 ~ (xB - 36)/7.071 ``` ```r # class(copper.data2) ``` ] --- # Fit coded data .font60[ ```r model2 = rsm(s~ FO(x1, x2), data= copper.data2) summary(model2) ``` ``` ## ## Call: ## rsm(formula = s ~ FO(x1, x2), data = copper.data2) ## ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 5.21000 0.19327 26.9571 2.478e-08 *** ## x1 -0.69199 0.30554 -2.2648 0.05792 . ## x2 0.29963 0.30559 0.9805 0.35950 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Multiple R-squared: 0.4653, Adjusted R-squared: 0.3125 ## F-statistic: 3.045 on 2 and 7 DF, p-value: 0.1118 ## ## Analysis of Variance Table ## ## Response: s ## Df Sum Sq Mean Sq F value Pr(>F) ## FO(x1, x2) 2 2.27507 1.13753 3.0453 0.11181 ## Residuals 7 2.61473 0.37353 ## Lack of fit 6 2.61228 0.43538 177.7063 0.05736 ## Pure error 1 0.00245 0.00245 ## ## Direction of steepest ascent (at radius 1): ## x1 x2 ## -0.9176670 0.3973504 ## ## Corresponding increment in original units: ## xA xB ## -1.297581 2.809665 ``` ] --- # Why coding transformation? Using an appropriate coding transformation of the data is essential. The way the data are coded affects the results of canonical analysis and steepest-ascent analysis; for example, unless the scaling factors are all equal, the path of steepest ascent obtained by fitting a model to the raw predictor values will differ from the path obtained in the coded units, decoded to the original scale. --- # Why coding tranformation? Using a coding method that makes all coded variables in the experiment vary over the same range is a way of giving each predictor an equal share in potentially determining the steepest-ascent path. Thus, coding is an important step in response-surface analysis. --- # Second-order form - Empirical quadratic model; When `\(f\)` is unknown model is approximated using the 2-term [Taylor series](https://www.youtube.com/watch?v=3d6DsjIBzJ4) to obtain a polynomial of the form: `$$y = \beta_{0} + \beta_{1}x_{1} + \beta_{2}x_{2}+ \beta_{11}x^{2}_{1} + β_{22}x^{2}_{2} + β_{12}x_{1}x_{2} + \epsilon$$` -- This general quadratic equation is quite flexible and with appropriate coefficients it can describe a wide variety of surfaces. -- Unless the function `\(f\)` is known, this equation forms the basis of response surface methods. --- # Requirements A response surface design should provide enough data that will allow estimation of the coefficients. For the general quadratic equation we must have at least 3 levels for each factor to allow estimation of quadratic terms. --- # Example 1 (Second - order) .font60[ ```r summary(rsm(response~SO(x1, x2), data=example1.data)) ``` ``` ## Warning in rsm(response ~ SO(x1, x2), data = example1.data): Some coefficients are aliased - cannot use 'rsm' methods. ## Returning an 'lm' object. ``` ``` ## ## Call: ## rsm(formula = response ~ SO(x1, x2), data = example1.data) ## ## Residuals: ## 1 2 3 4 5 6 7 ## 1.388e-17 6.939e-18 5.362e-18 5.940e-18 -1.600e-01 4.000e-02 2.400e-01 ## 8 9 ## -2.600e-01 1.400e-01 ## ## Coefficients: (1 not defined because of singularities) ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 40.46000 0.09274 436.291 1.66e-10 *** ## FO(x1, x2)x1 0.77500 0.10368 7.475 0.00171 ** ## FO(x1, x2)x2 0.32500 0.10368 3.135 0.03503 * ## TWI(x1, x2) -0.02500 0.10368 -0.241 0.82132 ## PQ(x1, x2)x1^2 -0.03500 0.13910 -0.252 0.81374 ## PQ(x1, x2)x2^2 NA NA NA NA ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.2074 on 4 degrees of freedom ## Multiple R-squared: 0.9427, Adjusted R-squared: 0.8854 ## F-statistic: 16.45 on 4 and 4 DF, p-value: 0.009471 ``` ] --- # Example 2 (Second - order) .font60[ ```r model3<-rsm(s~SO(x1, x2), data=copper.data2) summary(model3) ``` ``` ## ## Call: ## rsm(formula = s ~ SO(x1, x2), data = copper.data2) ## ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 4.284997 0.120484 35.5649 3.731e-06 *** ## x1 -0.691988 0.085182 -8.1236 0.0012489 ** ## x2 0.299631 0.085195 3.5170 0.0245158 * ## x1:x2 -0.249960 0.170362 -1.4672 0.2162153 ## x1^2 0.883493 0.159339 5.5447 0.0051745 ** ## x2^2 1.428743 0.159384 8.9642 0.0008569 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Multiple R-squared: 0.9763, Adjusted R-squared: 0.9466 ## F-statistic: 32.88 on 5 and 4 DF, p-value: 0.002409 ## ## Analysis of Variance Table ## ## Response: s ## Df Sum Sq Mean Sq F value Pr(>F) ## FO(x1, x2) 2 2.27507 1.13753 39.1811 0.002359 ## TWI(x1, x2) 1 0.06250 0.06250 2.1527 0.216215 ## PQ(x1, x2) 2 2.43610 1.21805 41.9545 0.002070 ## Residuals 4 0.11613 0.02903 ## Lack of fit 3 0.11368 0.03789 15.4668 0.184283 ## Pure error 1 0.00245 0.00245 ## ## Stationary point of response surface: ## x1 x2 ## 0.38150810 -0.07148575 ## ## Stationary point in original units: ## xA xB ## 11.03945 35.49452 ## ## Eigenanalysis: ## eigen() decomposition ## $values ## [1] 1.4560250 0.8562105 ## ## $vectors ## [,1] [,2] ## x1 -0.2132710 -0.9769931 ## x2 0.9769931 -0.2132710 ``` ] --- # Perspective plots: first order ```r persp(model2, x1~x2, theta = -145, col="blue") ``` <!-- --> --- # Perspective plots: second order ```r persp(model3, x1~x2, theta = -145, col="blue") ``` <!-- --> --- # Contour plots: first order ```r contour(model2, ~ x1 + x2, col="blue") ``` <!-- --> --- # Contour plots: second order ```r contour(model3, ~ x1 + x2, col="blue") ``` <!-- --> --- # Image: first order ```r image(model2, x1~x2) ``` <!-- --> --- # Image: second order ```r image(model3, x1~x2) ``` <!-- --> --- # Summary In a response surface study the primary purpose is not only to understand the mechanism of the underlying relationship between the response and the factors. -- But also more importantly is to develop a prediction equation with the eventual goal of determining the optimum (maximum, minimum or some specific level). -- Therefore, the specific coefficients in the general quadratic model are of less importance.