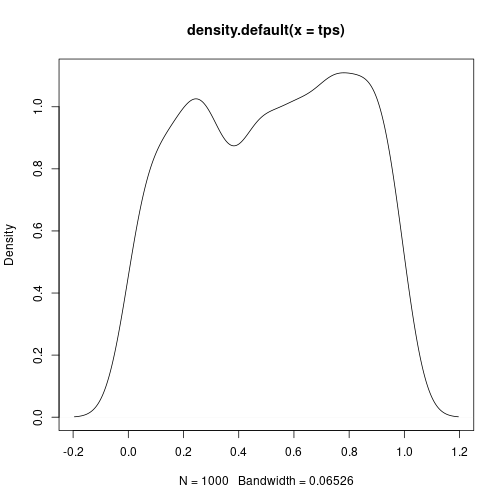

class: center, middle, inverse, title-slide

.title[
# Design and Analysis of Experiments
]
.subtitle[
## Overview
]
.author[
### Olga Lyashevska
]
.date[
### 2022-09-23
]

---

# About me

.pull-left[
- Lecturer, PhD supervisor at ATU (since 2015)
- Research Software Engineer (since 2022)
- PhD (Queen's University Belfast)
- Black belt in Judo and BJJ
- Russia
- Areas of expertise: Statistical Analysis, Data Analytics, Software Engineering
]

.pull-right[
<img src="figs/profilepic.JPG" style="width: 60%" />
]

---
background-image: url(figs/introduce_yourself.png)
background-size: contain
class: center, bottom, inverse

---
class: inverse, center, middle

# Ready? - Let's go!

.center[[Module Description](https://modules.atugalwaymayo.ie/en/module/view/6253)]

.center[[VLE/Moodle](https://vlegalwaymayo.atu.ie/course/view.php?id=6671)]

.center[Lectures: https://lyashevska.nl/teaching]

---

# Software

Install the **R Programming Language** from the [R project home page](https://www.r-project.org/).

--

I also recommend using the [RStudio IDE](https://rstudio.com/products/rstudio/download/), but you do not have to. Any other IDE or CLI will work.

--

Read: [R-intro manual](https://cran.r-project.org/doc/manuals/r-release/R-intro.html)

--

Other software: Stata, Minitab, SAS, SPSS; but R is free and better suits the purpose.
<img src="figs/Rlogo.png" style="width: 45%" />

---

# Pre-requisites

You should have had an introductory statistical methods course and be familiar with

- t-tests
- p-values
- confidence intervals
- basics of regression
- ANOVA

--

If you are not sure, please revise, for example [here](https://openstax.org/details/books/introductory-statistics).

---

# t-tests (1)

- One of the most common tests in statistics
- Determines whether the means of two groups are equal to each other <sup>*</sup>
- The null hypothesis is that the two means are equal; the alternative is that they are not
- Under the null hypothesis, the t-statistic follows a t-distribution with n<sub>1</sub> + n<sub>2</sub> - 2 degrees of freedom

.footnote[[*] The test assumes that both groups are sampled from normal distributions with equal variances.]

---

# t-tests (2)

```r
# two independent samples of size 10 from a standard normal distribution
# (no seed is set, so your numbers will differ from the output below)
x = rnorm(10)
y = rnorm(10)
# Student's two-sample t-test with pooled (equal) variances
t.test(x, y, var.equal = TRUE)
```

```
#
#   Two Sample t-test
#
# data:  x and y
# t = -0.4067, df = 18, p-value = 0.689
# alternative hypothesis: true difference in means is not equal to 0
# 95 percent confidence interval:
#  -1.0105019  0.6827222
# sample estimates:
# mean of x mean of y
# 0.1903158 0.3542057
```

See explanation [here](https://statistics.berkeley.edu/computing/r-t-tests)

---

# p-values (1)

The **p-value** is used to decide whether there is enough evidence to **reject** the null hypothesis.

`p-value < 0.05 --> Reject the null hypothesis`

---

# What is the behaviour of the p-values?

While not necessarily immediately obvious, under the null hypothesis the p-values of a test with a continuous test statistic should follow a uniform distribution between 0 and 1; that is, any value in the interval 0 to 1 is just as likely to occur as any other value.

--

Let's look at the density of the p-values when the null hypothesis is true:

---

# p-values (2)

```r
# 1000 t-tests on two samples drawn from the same N(0, 1) distribution, so H0 is true
tps = replicate(1000, t.test(rnorm(10), rnorm(10))$p.value)
# kernel density estimate of the p-values: roughly flat, apart from edge effects near 0 and 1
plot(density(tps))
```

---

# Why are p-values bad?
<img src="figs/pvaluebad.png" style="width: 60%" />

[Link](https://www.sciencedirect.com/science/article/pii/S1063458412007789)

---

# Linear regression

```r
# a boring regression
fit = lm(dist ~ 1 + speed, data = cars)
coef(summary(fit))
```

```
#               Estimate Std. Error   t value     Pr(>|t|)
# (Intercept) -17.579095  6.7584402 -2.601058 1.231882e-02
# speed         3.932409  0.4155128  9.463990 1.489836e-12
```

---

# ANOVA

- one-way ANOVA (one variable, several levels)
- two-way ANOVA (two variables, several levels)
- etc.

One-way ANOVA determines whether there is a statistically significant difference among several group means of one variable. The test compares the variance between groups with the variance within groups to decide whether the means are equal.

---

# ANOVA

```r
anova(fit)
```

```
# Analysis of Variance Table
#
# Response: dist
#           Df Sum Sq Mean Sq F value   Pr(>F)
# speed      1  21186 21185.5  89.567 1.49e-12 ***
# Residuals 48  11354   236.5
# ---
# Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

--

In a one-way ANOVA, H0 is that there is no difference among the group means. For the regression table above, the equivalent H0 is that the slope of `speed` is zero.

---

# Example

A pharmaceutical company conducts an experiment to test the effect of a new cholesterol medication. The company selects 15 subjects randomly from a larger population. Each subject is randomly assigned to one of three treatment groups, and each treatment group receives a different dose of the new medication: in Group 1, subjects receive 0 mg/day; in Group 2, 50 mg/day; and in Group 3, 100 mg/day.

---

# Syllabus

- Design Principles and Hypothesis Testing (**This week**)
- Completely Randomized Design (3)
- Randomized Block Designs (3)
- Factorial Designs (1)
- Nested Designs, Split Plot, Repeated Measures (3)
- Fractional Factorial Designs (1)
- Response Surface Designs (1)
- Checking model assumptions (1)

**Do not try to grasp it all at once!**

---

# Literature

Books:

.pull-left[
Design and Analysis of Experiments with R by Lawson.
<img src="figs/lawson-book.png" style="width: 60%" />
]

.pull-right[
Design and Analysis of Experiments by Dean et al.

<img src="figs/dean-book.png" style="width: 62%" />
]

---

# Statistics

Statistics is defined as the science of collecting, analyzing, and drawing conclusions from data.

Data is usually collected through:

- sampling surveys
- observational studies
- experiments

---

# Sampling surveys

Used when the purpose of data collection is to estimate some **property** of a **finite** population without conducting a complete census of every item in the population.

---

# Observational studies and experiments

Used to determine the **relationship** between two or more measured quantities in a **conceptual** population. A conceptual population, unlike a finite population, may only exist in our minds.

---

# Differences

- In an observational study, data is observed in its natural environment, but in an experiment the environment is controlled.
- In observational studies it cannot be proven that the relationships detected are cause and effect, even when the variables are correlated ([see spurious correlations](https://www.tylervigen.com/spurious-correlations)). Correlation does not imply causation, so no causation can be established.
- In an experiment some variables are changed while others are held constant. In that way the effect caused by the change in the variable can be directly observed, and predictions can be made. <span style="background-color: #FFFF00">Causation can be established!</span>

---

# Why experiment

There are many purposes for experimentation. Some examples include:

- determining the cause of variation in measured responses observed in the past;
- finding conditions that give rise to the maximum or minimum response;
- comparing the response between different settings of controllable variables;
- obtaining a mathematical model to predict future response values.

---

# Advantages of Experiments

1. Experiments allow us to set up a direct comparison between the treatments of interest.
2. We can design experiments to minimize any *bias* in the comparison.
3. We can design experiments so that the *error* in the comparison is small.
4. We are in control of experiments, and having that control allows us to make stronger *inferences* about the nature of differences that we see in the experiment.

--

Specifically, we may make inferences about **causation**.

---

# Fields of application

Examples: engineering design, quality improvement, industrial research and manufacturing, basic research in physical and biological science, research in social sciences, psychology, business management and marketing research, and many more.

However, the roots of modern experimental design methods stem from R. A. Fisher’s work in agricultural experimentation at the Rothamsted Experimental Station near Harpenden, England.

---

# Ethics

There are times when experiments are not feasible, even when the knowledge gained would be extremely valuable. For example, we can’t perform an experiment proving once and for all that smoking causes cancer in humans.

We can observe that smoking is **associated** with cancer in humans; we have mechanisms for this and can thus **infer causation**. But we cannot demonstrate responsiveness, since that would involve making some people smoke and making others not smoke. It is simply unethical.

---

# Definitions

**Experiment** is an action where the experimenter changes at least one of the variables being studied and then observes the effect of his or her action(s).

--

**Experimental unit** is the item under study upon which something is changed. This could be raw materials, human subjects, or just a point in time.

--

**Independent Variable** (Factor or Treatment Factor) is one of the variables under study that is being controlled at or near some target value, or level, during any given experiment.
The level is changed in some systematic way from run to run in order to determine what effect it has on the response(s).

---

# Definitions

**Dependent Variable** (or the Response) is the characteristic of the experimental unit that is measured after each experiment or run.

--

**Effect** is the change in the response that is caused by a change in a factor or independent variable.

---

# Purposes of experimental design

The use of experimental designs is a prescription for successful application of the scientific method. The scientific method consists of iterative application of the following steps:

- observing the state of nature,
- hypothesizing the mechanism for what has been observed, then
- collecting data, and
- analyzing the data to confirm or reject the hypothesis.

---

# Types of Experimental Design

There are many types. The appropriate one to use depends upon the objectives of the experimentation. We can classify objectives into two main categories:

- To study the sources of variability;
- To establish cause and effect relationships.

---

# Objectives, Design and Conclusions

<img src="figs/objectives-design-concl.png" style="width: 80%" />

---

# Planning an experiment

1. Define Objectives
1. Identify Experimental Units
1. Define a Meaningful and Measurable Response or Dependent Variable
1. List the Independent and Lurking Variables
1. Run Pilot Tests
1. Choose the Experimental Design
1. Determine the Number of Replicates Required
1. Randomize the Experimental Conditions to Experimental Units
1. Describe a Method for Data Analysis
1. Timetable and Budget for Resources Needed to Complete the Experiments

---

# A good experimental design must:

- Avoid systematic error
- Be precise
- Allow estimation of error
- Have broad validity and reliability

---

# Systematic error

Comparative experiments estimate differences in response between treatments.
If our experiment has systematic error, then our comparisons will be biased, no matter how precise our measurements are or how many experimental units we use. An everyday example of systematic error: imagine that your bathroom scale always registers your weight as five pounds lighter than it actually is.

---

# Systematic error

The amount of systematic error is inversely related to the validity of a measurement instrument: as systematic errors increase, validity falls and vice versa.

--

For example, if responses for units receiving treatment one are measured with instrument A, and responses for treatment two are measured with instrument B, then we don’t know whether any observed differences are due to treatment effects or to instrument miscalibrations.

Randomization is the main tool to combat systematic error.

---

# Random error

Random errors in measurement are inconsistent errors that happen by chance. They are inherently unpredictable and transitory. Random errors include sampling errors, unpredictable fluctuations in the measurement apparatus, and changes in a respondent's mood.

The amount of random error is inversely related to reliability.

---

# Systematic error vs Random error

<img src=figs/SystematicRandomError.png style="width: 90%" />

Which one is systematic and which one is random?

--

```
Answers:
Left: Systematic error
Right: Random error
```

--

Error that is systematic is called **bias**.

---

# Precision and Accuracy

<img src=figs/ValidityReliabilityTarget.png style="width: 90%" />

--

Which one is precise? Which one is accurate?

--

```
Answers:
Left: Not accurate, precise
Center: Accurate, not precise
Right: Accurate, precise
```

--

Experiments are precise when random error in treatment comparisons is small. Precision depends on the size of the random errors in the responses, the number of units used, and the experimental design used.

---

# Error estimation

Experiments must be designed so that we have an estimate of the size of random error.
This permits statistical inference: for example, confidence intervals or tests of significance. We cannot do inference without an estimate of error.

---

# Validity

The conclusions we draw from an experiment are applicable to the experimental units we used in the experiment. If the units are actually a statistical sample from some population of units, then the conclusions are also valid for the population.

--

For example, suppose we compare two different drugs for treating attention deficit disorder, and our subjects are preadolescent boys from a clinic. We might have a fair case that our results would hold for preadolescent boys elsewhere, but even that might not be true. If we want wide validity (for example, a broad age range and both genders), then the experimental units should reflect the population about which we wish to draw inference.

---

# Validity and Reliability

<img src=figs/ValidityReliabilityTarget.png style="width: 90%" />

Which one is valid? Which one is reliable?

--

```
Answers:
Left: Not valid, reliable
Center: Valid, not reliable
Right: Valid, reliable
```

---

# Validity and Reliability

**Validity** is the degree to which the researcher actually measures what he or she is trying to measure.

**Reliability** means that the results obtained are consistent.

--

Accurate results are both reliable and valid.

---

# Validity vs Precision

<img src=figs/ValidityReliabilityTarget.png style="width: 90%" />

Which one is precise and valid?

--

```
Answers:
Left: Precise, not valid
Center: Not precise, valid
Right: Precise, valid
```

Some compromise will probably be needed. For example, broadening the scope of validity by using a variety of experimental units may decrease the precision of the responses.

---

# Roadmap

<img src=figs/roadmap.png style="width: 90%" />

---

# Next week

<img src=figs/roadmap-crd.png style="width: 90%" />

---
class: center, middle

.font200[Thanks!]

.font150[Do not forget to install all software *before* the next class and do the reading!]
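
---

# Backup: randomizing the cholesterol Example

A minimal sketch of how the pharmaceutical Example could be set up in R. It randomly assigns the 15 subjects to the three dose groups (equal groups of 5 are an assumption; the Example does not state group sizes) and runs a one-way ANOVA on **simulated** responses. The assumed mean reductions and standard deviation are hypothetical, chosen only for illustration, so the output says nothing about any real medication.

```r
set.seed(2022)  # fix the random number stream so the allocation is reproducible

# three dose levels (mg/day); 5 subjects per dose is an assumption
dose_levels = factor(rep(c(0, 50, 100), each = 5))

# complete randomization: shuffle the dose labels over subjects 1..15
design = data.frame(subject = 1:15, dose = sample(dose_levels))
table(design$dose)  # every dose level still appears 5 times

# hypothetical responses: assumed mean cholesterol reduction per dose, sd = 10
assumed_means = c("0" = 0, "50" = 10, "100" = 20)
design$reduction = rnorm(nrow(design),
                         mean = assumed_means[as.character(design$dose)],
                         sd = 10)

# one-way ANOVA; H0: the three dose means are equal
fit_dose = aov(reduction ~ dose, data = design)
summary(fit_dose)
```

Dropping `set.seed()` gives a different allocation on every run, which is exactly what randomization is meant to do. With 3 groups and 15 subjects, the F test has 3 - 1 = 2 and 15 - 3 = 12 degrees of freedom.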